TPCx-V

User's Guide

Last modified 8/30/2015

This document will help you set up one TPCx-V Tile using Virtual Machines running the following software configuration.

Operating System: RHEL 7 or CentOS 7

Database: PostgreSQL 9.3

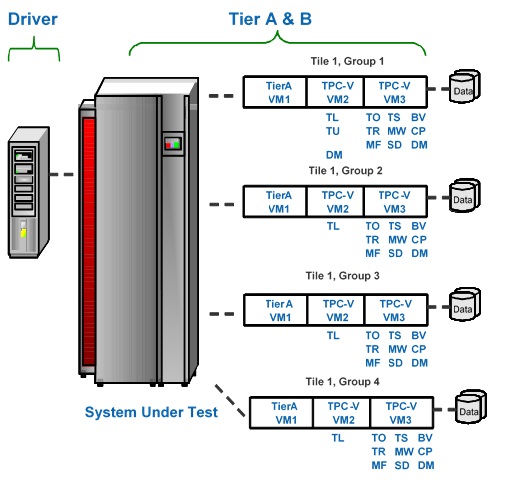

The following figure represents all of the components of the reference driver and the corresponding network connections between them. This figure may be a useful visual reference as you work through these instructions. Note that this figure represents a System Under Test (SUT) with a single-tile test configuration using only a single driver. For each additional tile, an additional twelve SUT VMs, potentially additional vmee and vce processes, and corresponding connections to those SUT VMs and the vdriver prime driver would be required.

As you can see from the diagram in Section 1.0, a single-tile SUT configuration consists of 12 different virtual machines. The instructions in this section describe how to set up a single virtual machine. You can either run through the instructions 12 times to set up the various virtual machines, or you can set up one Tier A virtual machine and one Tier B virtual machine using the instructions, clone those images as needed, and then make the necessary modifications to the cloned images to customize them for their purpose (unique hostname, unique IP address, secondary disk devices, etc.).

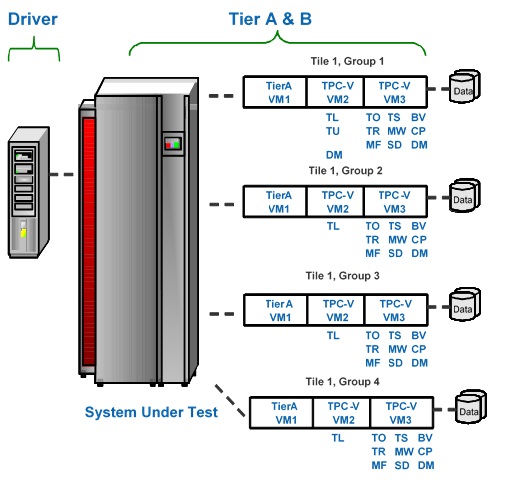

The figure below shows a sample 1-Tile SUT configuration, including the 3 VMs in each Group and the division of transaction types between the two Tier B VMs.

A preconfigured OVF template is available to download from the TPC website. This template has all the necessary software installed and configured. The root password of this VM is "tpc-v" (note: no letter "x" in the password). If you have downloaded and deployed this template, you can skip to section 2.4.1, Clone Virtual Machines.

Once the VMs are configured, most of the commands are run as user postgres. Installing the PostgreSQL software creates a user postgres in /etc/passwd, but that user has no password and cannot be logged into directly. You can either give it a password, or log into the VM as root and then run su - postgres.

Unless otherwise noted, the commands in sections 2.1 to 2.5.2 are meant to be run as the user root.

Create a virtual machine with the following minimum characteristics:

- 2 virtual CPUs

- 4GB memory

- 6GB primary disk device

- 1 network interface

The 6GB of space in the root file system should be enough to install the operating system and all the software packages. Ordinarily, you should install all the software and finish configuring the first VM, then clone it into Tier A and Tier B VMs. At that point, you will need the extra space for the databases in the Tier B VMs.

The Tier B virtual machines need additional disk storage for the database, backups, and flat files. The amount of storage needed depends on the Group that will be running in the VM; VM3 Tier B VMs require slightly more space than VM2 Tier B VMs. Use the chart below to determine the extra disk space needed.

The primary disk device of Tier B VMs can be sized to include enough space for the database, backup, and flat files (the flat files may be created on a single VM and NFS-mounted on all other VMs), or other storage devices can be added to provide the needed size.

| Group | VM2 Database Size (GB) | VM2 Backup Size (GB) | VM3 Database Size (GB) | VM3 Backup Size (GB) | Flat Files (GB) |
|---|---|---|---|---|---|
| Group 1 | 37 | 3.8 | 38.5 | 3.8 | 60 |
| Group 2 | 70.5 | 6.9 | 73.5 | 6.9 | 60 |
| Group 3 | 105 | 10 | 110 | 10 | 60 |
| Group 4 | 138 | 14 | 144 | 14 | 60 |
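To total the extra space for a configuration, the table can be turned into a small lookup. The following Python sketch copies the table values; the function names are hypothetical, and treating the 60GB of flat files as needed only on the one VM that generates and NFS-exports them is an assumption based on the note above.

```python
# Extra space in GB per Tier B VM, copied from the table above:
# Group -> (VM2 database, VM2 backup, VM3 database, VM3 backup)
SIZES_GB = {
    1: (37.0, 3.8, 38.5, 3.8),
    2: (70.5, 6.9, 73.5, 6.9),
    3: (105.0, 10.0, 110.0, 10.0),
    4: (138.0, 14.0, 144.0, 14.0),
}
FLAT_FILES_GB = 60.0


def extra_space_gb(group: int, vm: str, holds_flat_files: bool = False) -> float:
    """Database plus backup space for one Tier B VM ("VM2" or "VM3")."""
    vm2_db, vm2_backup, vm3_db, vm3_backup = SIZES_GB[group]
    if vm == "VM2":
        total = vm2_db + vm2_backup
    elif vm == "VM3":
        total = vm3_db + vm3_backup
    else:
        raise ValueError(f"unknown Tier B VM: {vm!r}")
    if holds_flat_files:  # assumed: only the VM that generates/exports the flat files
        total += FLAT_FILES_GB
    return round(total, 1)


def tile_total_gb() -> float:
    """All eight Tier B VMs of one Tile plus a single shared set of flat files."""
    total = sum(extra_space_gb(g, vm) for g in SIZES_GB for vm in ("VM2", "VM3"))
    return round(total + FLAT_FILES_GB, 1)


if __name__ == "__main__":
    for g in sorted(SIZES_GB):
        print(f"Group {g}: VM2 {extra_space_gb(g, 'VM2')} GB, "
              f"VM3 {extra_space_gb(g, 'VM3')} GB")
    print(f"One Tile with shared flat files: {tile_total_gb()} GB")
```

For example, a Group 1 VM2 that also generates the flat files needs about 100.8GB beyond the operating system.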

Install the 64-bit server version of RHEL 7 or CentOS 7 in the virtual machine on the primary disk device of the VM.

In the "INSTALLATION SUMMARY" window, click on "SOFTWARE SELECTION", and under "Base Environment", choose "Server with GUI".

Under "Add-Ons for Selected Environment", choose the following:

- Guest Agents/File and Storage Server + Network File System Client

- Large Systems Performance

- Performance Tools

- Compatibility Libraries

- Development Tools

Since you will install Java and PostgreSQL 9.3 in later steps, it is a good idea NOT to install the "PostgreSQL Database Server" or "Java Platform" Add-On packages during the Operating System installation, to avoid having two possibly conflicting versions of the same software present on the VM.

- Increase the limits in /etc/security/limits.conf:

* soft nproc 578528

* hard nproc 578528

* soft memlock 33554432

* hard memlock 33554432

postgres soft nproc 578528

postgres hard nproc 578528

postgres soft memlock 536870912

postgres hard memlock 536870912

- Add these to /etc/sysctl.conf, then run sysctl -p to apply them without a reboot:

kernel.sem = 500 64000 400 512

vm.min_free_kbytes = 65536

kernel.shmall = 134217728

kernel.shmmax = 549755813888

kernel.shmmni = 4096

fs.file-max = 6815744

fs.aio-max-nr=1048576

kernel.core_uses_pid = 1

kernel.wake_balance = 0

kernel.perf_event_paranoid = -1

vm.swappiness = 0
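After running sysctl -p, you can confirm that the running kernel actually picked up every value. The Python sketch below is a hypothetical helper, not part of the kit: it parses /etc/sysctl.conf (letting a later duplicate override an earlier one, as happens when sysctl -p applies the file from top to bottom) and compares each key with the matching file under /proc/sys. A key that the kernel does not provide is reported with the value None.

```python
from pathlib import Path


def parse_sysctl_conf(text: str) -> dict:
    """Parse 'key = value' lines; a later duplicate overrides an earlier one."""
    settings = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", ";")):
            continue
        key, sep, value = line.partition("=")
        if sep:
            # Collapse whitespace so "500 64000 400 512" matches the
            # tab-separated form the kernel reports for kernel.sem.
            settings[key.strip()] = " ".join(value.split())
    return settings


def live_value(key: str, proc_root: str = "/proc/sys"):
    """Current kernel value of a sysctl key, or None if it does not exist."""
    try:
        return " ".join(Path(proc_root, *key.split(".")).read_text().split())
    except OSError:
        return None


def mismatches(wanted: dict, proc_root: str = "/proc/sys") -> dict:
    """Return {key: (wanted, actual)} for every setting not currently in effect."""
    result = {}
    for key, value in wanted.items():
        actual = live_value(key, proc_root)
        if actual != value:
            result[key] = (value, actual)
    return result


if __name__ == "__main__":
    wanted = parse_sysctl_conf(Path("/etc/sysctl.conf").read_text())
    for key, (want, have) in sorted(mismatches(wanted).items()):
        print(f"{key}: wanted {want!r}, running kernel has {have!r}")
```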

- Disable the firewall:

systemctl stop firewalld

systemctl disable firewalld

systemctl stop iptables

systemctl disable iptables

- We need the Java 7 JDK (the kit hasn't been tested with Java 8). You can use the OpenJDK package that comes stock with RHEL 7. Alternatively, you can install the Oracle Java 7 JDK, for example, 7u72.

If you install the Oracle JDK and want to ensure that you are using it rather than OpenJDK, it is recommended that you change the system defaults (at least /usr/bin/java) to point to the newly installed Oracle JDK with the following commands:

update-alternatives --auto java

update-alternatives --install /usr/bin/java java /usr/java/default/bin/java 180051 \

--slave /usr/bin/keytool keytool /usr/java/default/bin/keytool \

--slave /usr/bin/orbd orbd /usr/java/default/bin/orbd \

--slave /usr/bin/pack200 pack200 /usr/java/default/bin/pack200 \

--slave /usr/bin/rmid rmid /usr/java/default/bin/rmid \

--slave /usr/bin/rmiregistry rmiregistry /usr/java/default/bin/rmiregistry \

--slave /usr/bin/servertool servertool /usr/java/default/bin/servertool \

--slave /usr/bin/tnameserv tnameserv /usr/java/default/bin/tnameserv \

--slave /usr/bin/unpack200 unpack200 /usr/java/default/bin/unpack200 \

--slave /usr/lib/jvm/jre jre /usr/java/default/jre \

--slave /usr/share/man/man1/java.1 java.1 /usr/java/default/man/man1/java.1 \

--slave /usr/share/man/man1/keytool.1 keytool.1 /usr/java/default/man/man1/keytool.1 \

--slave /usr/share/man/man1/orbd.1 orbd.1 /usr/java/default/man/man1/orbd.1 \

--slave /usr/share/man/man1/pack200.1 pack200.1 /usr/java/default/man/man1/pack200.1 \

--slave /usr/share/man/man1/rmid.1 rmid.1 /usr/java/default/man/man1/rmid.1 \

--slave /usr/share/man/man1/rmiregistry.1 rmiregistry.1 /usr/java/default/man/man1/rmiregistry.1 \

--slave /usr/share/man/man1/servertool.1 servertool.1 /usr/java/default/man/man1/servertool.1 \

--slave /usr/share/man/man1/tnameserv.1 tnameserv.1 /usr/java/default/man/man1/tnameserv.1 \

--slave /usr/share/man/man1/unpack200.1 unpack200.1 /usr/java/default/man/man1/unpack200.1
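Because the command above is long and repetitive, typos in the --slave links are easy to make. This Python sketch (hypothetical, not part of the kit) regenerates the same command from a list of tool names and refuses repeated link paths or names. It assumes, as the command above does, that the Oracle JDK is installed under /usr/java/default.

```python
import shlex

JAVA_HOME = "/usr/java/default"
PRIORITY = 180051
# JDK tools that get a /usr/bin link and a man page, as in the command above.
TOOLS = ["keytool", "orbd", "pack200", "rmid", "rmiregistry",
         "servertool", "tnameserv", "unpack200"]


def alternatives_args(java_home: str = JAVA_HOME, priority: int = PRIORITY) -> list:
    """Build the update-alternatives --install argument list."""
    slaves = [(f"/usr/bin/{t}", t, f"{java_home}/bin/{t}") for t in TOOLS]
    slaves.append(("/usr/lib/jvm/jre", "jre", f"{java_home}/jre"))
    for name in ["java"] + TOOLS:
        slaves.append((f"/usr/share/man/man1/{name}.1", f"{name}.1",
                       f"{java_home}/man/man1/{name}.1"))
    # A repeated link path or name is almost certainly a copy-and-paste typo.
    links = [link for link, _, _ in slaves]
    names = [name for _, name, _ in slaves]
    if len(set(links)) != len(links) or len(set(names)) != len(names):
        raise ValueError("duplicate --slave link path or name")
    args = ["update-alternatives", "--install", "/usr/bin/java", "java",
            f"{java_home}/bin/java", str(priority)]
    for link, name, target in slaves:
        args += ["--slave", link, name, target]
    return args


if __name__ == "__main__":
    # Print the command for review; run it as root once it looks right.
    print(shlex.join(alternatives_args()))
```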

Install and configure PostgreSQL 9.3 in the virtual machine. The binaries and libraries will be installed in /usr/pgsql-9.3.

-

Install ODBC by downloading unixODBC-2.3.1-10.el7.x86_64.rpm and unixODBC-devel-2.3.1-10.el7.x86_64.rpm from rpmfind.net:

yum install unixODBC-2.3.1-10.el7.x86_64.rpm unixODBC-devel-2.3.1-10.el7.x86_64.rpm

- Download PostgreSQL:

- Go to yum.postgresql.org/repopackages.php

- Click on the link for version 9.3 for "Red Hat Enterprise Linux 7 - x86_64" or "CentOS 7 - x86_64", depending on which OS you are using.

- Download the pgdg-redhat93-9.3-1.noarch.rpm or pgdg-centos93-9.3-1.noarch.rpm file, depending on which OS you are using, and put it on the VM in the /root directory.

- Depending on OS, run either:

rpm -ivh pgdg-redhat93-9.3-1.noarch.rpm

or

rpm -ivh pgdg-centos93-9.3-1.noarch.rpm

- Get postgresql93-odbc-09.03.0300-1PGDG.rhel7.x86_64.rpm from postgresql.org

- Run:

rpm -ivh postgresql93-odbc-09.03.0300-1PGDG.rhel7.x86_64.rpm

- Run:

yum install postgresql93 postgresql93-libs postgresql93-devel postgresql93-server postgresql93-odbc

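- You should now have a user postgres on the VM; if not, create it. Perform the following steps as user postgres, except for copying the kit files into /opt and the tar and chown commands, which need root privileges.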

- Put the TPCx-V kit tar files xVAudit.tgz, VGen.tgz, VDb.tgz, and VDriver.tgz into the /opt directory.

- Untar the kit files to their target locations:

tar xzvf /opt/VGen.tgz --directory=/opt

tar xzvf /opt/VDb.tgz --directory=/opt

tar xzvf /opt/VDriver.tgz --directory=/opt

tar xzvf /opt/xVAudit.tgz --directory=/opt

chown -R postgres:postgres /opt/VDb /opt/VDriver /opt/VGen /opt/xVAudit

- Add the following lines to the end of ~postgres/.bash_profile to set these values for future logins. Note the setting of PGDATA to /dbstore/tpcv-data, which is used throughout this Guide. If you want to store the postgres data and configuration files in a different location, change PGDATA accordingly.

export PGHOME=/usr/pgsql-9.3

export PATH=$PATH:$PGHOME/bin

export PGDATA=/dbstore/tpcv-data

export LD_LIBRARY_PATH=${PGHOME}/lib:/opt/VGen/lib:/usr/local/lib

export JAVA_INC=/usr/java/latest/include

- We use the pgstatspack package to collect performance data. As user postgres, download the file pgstatspack_version_2.3.1.tar.gz from pgfoundry.org and untar it in the postgres home directory.

At this point, your VM has all the required software. You can now clone it 12 (or 24, etc.) times for all the VMs in a TPCx-V configuration, and customize the Tier A and Tier B VMs by following the instructions below.

On VMware vSphere platforms, you need to install the deployPkg plug-in as described in KB article 2075048. You can then clone VMs by simply providing the new VM's network name and IP address.

If you clone the Tier B VMs from a single source, remember to properly resize the virtual disk

that holds the /dbstore file system, and run resize2fs(8) after powering up the VM.

You can use the VDriver/scripts/cloneVMs.ps1 PowerCLI script to clone the VMs in a VMware vSphere environment. You will need to invest time in installing VMware PowerCLI on a Windows PC (or VM!) and customizing the script for your environment. That time will be well spent: the script automates the cloning process and will save a lot of time in the long run.

The run-time bash scripts depend on the prime driver being able to ssh into all 13 VMs, as both root and postgres, using the file .ssh/authorized_keys. It's a good idea to set this up on the first VM before making the clones:

ssh-keygen # You can simply hit the Return Key in response to all the questions

ssh-copy-id root@nameofmyvm # Use the Network Name of your VM here

ssh-copy-id postgres@nameofmyvm

Test this by logging in as user postgres and running a simple command like "ssh nameofmyvm date".

If it asks for a password, run the following command as root:

restorecon -R -v /root/.ssh
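Once the clones exist, you can check passwordless ssh to every VM in one pass. The Python sketch below is a hypothetical helper; the host names are placeholders. It relies on the standard OpenSSH options BatchMode=yes (fail instead of prompting for a password) and ConnectTimeout. Note that BatchMode also makes ssh fail on a host whose key has not been accepted yet, so connect to each VM interactively once first.

```python
import subprocess


def ssh_check_cmd(user: str, host: str) -> list:
    # BatchMode=yes makes ssh fail instead of prompting for a password, so a
    # missing authorized_keys entry (or a bad SELinux label) shows up as a failure.
    return ["ssh", "-o", "BatchMode=yes", "-o", "ConnectTimeout=10",
            f"{user}@{host}", "date"]


def check_hosts(hosts, users=("root", "postgres")) -> list:
    """Return the (user, host) pairs for which passwordless ssh did not work."""
    failures = []
    for host in hosts:
        for user in users:
            result = subprocess.run(ssh_check_cmd(user, host),
                                    stdout=subprocess.DEVNULL,
                                    stderr=subprocess.DEVNULL)
            if result.returncode != 0:
                failures.append((user, host))
    return failures


if __name__ == "__main__":
    # Hypothetical names for the 13 VMs (12 SUT VMs plus the prime driver).
    hosts = [f"tpcv-vm{i}" for i in range(1, 14)]
    for user, host in check_hosts(hosts):
        print(f"passwordless ssh failed: {user}@{host}")
```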

Use the instructions in this section to finish configuring a VM for use in Tier B. Note that Tier B VMs require additional virtual disk space, as listed in the table in section 2.1.1.

If a separate file system will be used to store the postgres data files, create it with the following command, where <device> is the virtual disk or partition you set aside for the database:

mkfs.ext4 -F -v -t ext4 -T largefile4 -m 0 -j -O extent -O dir_index <device>

and mount it as /dbstore with the mount options nofail,noatime,nodiratime,nobarrier.
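To make the mount persistent across reboots, add a line for it to /etc/fstab. The small Python sketch below (hypothetical helper) formats such a line with the mount options above; the device names are placeholders, and the dump/fsck fields (0 and 2, the usual values for a non-root file system) are our choice rather than something the kit prescribes.

```python
def fstab_entry(device: str, mount_point: str = "/dbstore") -> str:
    """Return one /etc/fstab line for an ext4 file system with the options above."""
    options = ",".join(["nofail", "noatime", "nodiratime", "nobarrier"])
    # Fields: device, mount point, type, options, dump (0 = off),
    # fsck pass (2 = check after the root file system).
    return "\t".join([device, mount_point, "ext4", options, "0", "2"])


if __name__ == "__main__":
    # /dev/sdb and /dev/sdc are hypothetical; a UUID=<uuid> reference
    # (see blkid) survives device renaming better.
    print(fstab_entry("/dev/sdb"))
    print(fstab_entry("/dev/sdc", "/vgenstore"))
```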

Run the following commands on the Tier B VM:

mkdir -p /dbstore/tpcv-data

mkdir /dbstore/tpcv-index

mkdir /dbstore/tpcv-temp

chown -R postgres:postgres /dbstore

- Since we have customized the location of the PostgreSQL data files to /dbstore/tpcv-data, we need to tell systemd where to find these files.

- Create a /etc/systemd/system/postgresql-9.3.service file with the following contents:

.include /lib/systemd/system/postgresql-9.3.service

[Service]

Environment=PGDATA=/dbstore/tpcv-data

- Create the drop-in directory:

mkdir /etc/systemd/system/postgresql-9.3.service.d

- Create a /etc/systemd/system/postgresql-9.3.service.d/restart.conf file with the following contents:

[Service]

Environment=PGDATA=/dbstore/tpcv-data

-

Run the following commands on the Tier B VM to set up PostgreSQL (daemon-reload makes systemd pick up the unit files created above):

systemctl daemon-reload

systemctl enable postgresql-9.3.service

/usr/pgsql-9.3/bin/postgresql93-setup initdb

systemctl start postgresql-9.3.service

- Edit the $PGDATA/pg_hba.conf file and change the authentication method of its entries from peer and ident to trust.

- Update the postgresql.conf file ($PGDATA/postgresql.conf) so that PostgreSQL listens on the VM's IP address and not just on localhost: update (and uncomment, if necessary) the listen_addresses parameter and add the IP address of the server.

The following postgresql.conf changes are useful when loading the database:

shared_buffers = 1024MB

wal_sync_method = open_datasync

wal_writer_delay = 10ms

checkpoint_segments = 30

checkpoint_completion_target = 0.9

- Restart the PostgreSQL service so the changes take effect:

systemctl restart postgresql-9.3.service
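The pg_hba.conf and postgresql.conf edits above can also be scripted, which helps when preparing eight Tier B VMs. The Python sketch below is a hypothetical helper, run as postgres with PGDATA set; it assumes the METHOD is the last field of each pg_hba.conf entry (no auth-options after it), and the IP address is a placeholder from the documentation range. Copy both files before running it, since it rewrites them in place and drops trailing comments on the lines it changes.

```python
import os
import re
from pathlib import Path


def pg_hba_trust(text: str) -> str:
    """Change the METHOD column of peer/ident entries to trust."""
    out = []
    for line in text.splitlines():
        fields = line.split()
        if fields and not fields[0].startswith("#") and fields[-1] in ("peer", "ident"):
            stripped = line.rstrip()
            line = stripped[: len(stripped) - len(fields[-1])] + "trust"
        out.append(line)
    return "\n".join(out) + "\n"


def set_conf(text: str, settings: dict) -> str:
    """Set parameters in postgresql.conf, uncommenting them if needed.

    Every occurrence (commented or not) is rewritten, because PostgreSQL uses
    the last active setting in the file; parameters not found are appended.
    """
    lines = text.splitlines()
    seen = set()
    for i, line in enumerate(lines):
        m = re.match(r"\s*#?\s*([a-z_]+)\s*=", line)
        if m and m.group(1) in settings:
            name = m.group(1)
            lines[i] = f"{name} = {settings[name]}"
            seen.add(name)
    lines += [f"{n} = {v}" for n, v in settings.items() if n not in seen]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    pgdata = Path(os.environ["PGDATA"])
    hba = pgdata / "pg_hba.conf"
    hba.write_text(pg_hba_trust(hba.read_text()))
    conf = pgdata / "postgresql.conf"
    conf.write_text(set_conf(conf.read_text(), {
        # 192.0.2.10 is a placeholder; use this Tier B VM's own IP address.
        "listen_addresses": "'localhost,192.0.2.10'",
        "shared_buffers": "1024MB",
        "wal_sync_method": "open_datasync",
        "wal_writer_delay": "10ms",
        "checkpoint_segments": "30",
        "checkpoint_completion_target": "0.9",
    }))
```

Restart PostgreSQL afterwards, as described above.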

Run this step as user postgres.

All Tier B VMs use the same flat files for populating the databases, even in a multi-Tile configuration; each Group 1/2/3/4 loads some or all of the flat files. So there is no need to generate identical flat files on all 8 database VMs and waste disk space. The 8 database VMs can NFS-mount /vgenstore from one VM that has generated the flat files.

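To create the file system for the flat files, run the following command as user root, where <device> is the virtual disk or partition you set aside for the flat files:

mkfs.ext4 -F -v -t ext4 -T largefile4 -m 0 -j -O extent -O dir_index <device>

Mount the new file system as /vgenstore, and give it to user postgres:

chown postgres:postgres /vgenstore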

Note: once the file system is created and mounted, all the remaining commands in this section are executed as user postgres.

Build the VGen binaries by executing the following commands:

cd /opt/VGen/prj

cd GNUMake

make

cd ../..

The space for the flat files (60GB for the minimum-sized database) can be anywhere, as long as all Tier B VMs can access it, and as long as the "-o" argument to VGenLoader and the parameter flatfile_dir in VDb/pgsql/scripts/linux/env.sh are the same path. Here we assume the flat files are in /vgenstore/flat_out.

Run the following commands (which are in the script /opt/VGen/scripts/create_TPCx-V_flat_files.sh) to create one set of flat files that can be used for all TPCx-V Tiles and Groups.

#!/bin/bash

cd /opt/VGen

mkdir -p /vgenstore/flat_out/Fixed

mkdir -p /vgenstore/flat_out/Scaling1

mkdir -p /vgenstore/flat_out/Scaling2

mkdir -p /vgenstore/flat_out/Scaling3

mkdir -p /vgenstore/flat_out/Scaling4

bin/VGenLoader -xf -c 20000 -t 20000 -w 125 -o /vgenstore/flat_out/Fixed &

bin/VGenLoader -xd -b 1 -c 5000 -t 20000 -w 125 -o /vgenstore/flat_out/Scaling1 &

bin/VGenLoader -xd -b 5001 -c 5000 -t 20000 -w 125 -o /vgenstore/flat_out/Scaling2 &

bin/VGenLoader -xd -b 10001 -c 5000 -t 20000 -w 125 -o /vgenstore/flat_out/Scaling3 &

bin/VGenLoader -xd -b 15001 -c 5000 -t 20000 -w 125 -o /vgenstore/flat_out/Scaling4 &

wait
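One limitation of the script above is that a bare bash wait always returns 0, so a failed VGenLoader goes unnoticed until the load. The Python sketch below (hypothetical, not part of the kit) runs the same five VGenLoader invocations in parallel and exits non-zero if any of them fails; the flags are copied from the script, and the paths assume the /opt/VGen and /vgenstore/flat_out locations used in this Guide.

```python
import os
import subprocess
import sys

VGEN_DIR = "/opt/VGen"
OUT_DIR = "/vgenstore/flat_out"
COMMON = ["-t", "20000", "-w", "125"]


def vgen_commands() -> list:
    """The five VGenLoader invocations from create_TPCx-V_flat_files.sh."""
    cmds = [["bin/VGenLoader", "-xf", "-c", "20000", *COMMON,
             "-o", f"{OUT_DIR}/Fixed"]]
    # Four 5000-customer chunks starting at customer 1, 5001, 10001, 15001.
    for i in range(4):
        cmds.append(["bin/VGenLoader", "-xd", "-b", str(i * 5000 + 1),
                     "-c", "5000", *COMMON, "-o", f"{OUT_DIR}/Scaling{i + 1}"])
    return cmds


def run_parallel(cmds) -> list:
    """Start all loaders at once (like "&") and wait for all (like "wait")."""
    for cmd in cmds:
        os.makedirs(cmd[-1], exist_ok=True)  # the mkdir -p steps
    procs = [subprocess.Popen(cmd, cwd=VGEN_DIR) for cmd in cmds]
    return [p.wait() for p in procs]


if __name__ == "__main__":
    codes = run_parallel(vgen_commands())
    # Unlike the bash script, report a failure if any loader failed.
    sys.exit(1 if any(codes) else 0)
```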

The above script creates flat files for all four Groups with the minimum customer counts of

Group 1: 5000

Group 2: 10000

Group 3: 15000

Group 4: 20000

and uses 60GB of disk space.

The database and backup sizes of the various Groups at this size, together with their load and backup times, are:

Group 1: 35GB DB, 3.8GB backup (22 minutes to load, 17 minutes to create the backup) (16 minute restore from/to flash)

Group 2: 67GB DB, 6.9GB backup (48 minutes to load, 29 minutes to create the backup)

Group 3: 100GB DB, 10GB backup (70 minutes to load, 40 minutes to create the backup)

Group 4: 131GB DB, 14GB backup (98 minutes to load, 53 minutes to create the backup)

- Make sure the parameter flatfile_dir in /opt/VDb/pgsql/scripts/linux/env.sh points to where the flat files were created. Change the parameter scaling_tables_dirs in /opt/VDb/pgsql/scripts/linux/env.sh to "/Scaling[1-1]", "/Scaling[1-2]", and "/Scaling[1-3]" for G1, G2, and G3 VMs, respectively.
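When several Tier B VMs are prepared from one image, the env.sh edits above can be applied per VM. The Python sketch below is a hypothetical helper that assumes env.sh sets these parameters as plain shell assignments (name=... or export name=...); check your copy of env.sh before relying on it. The "/Scaling[1-4]" value for Group 4 follows the pattern of Groups 1 to 3 and is an assumption, not something stated above.

```python
import re
import sys

ENV_SH = "/opt/VDb/pgsql/scripts/linux/env.sh"


def scaling_dirs(group: int) -> str:
    """scaling_tables_dirs value for a Tier B VM in the given Group."""
    if group not in (1, 2, 3, 4):
        raise ValueError("group must be 1, 2, 3, or 4")
    # Groups 1-3 follow the text; "/Scaling[1-4]" for Group 4 is an assumption.
    return f"/Scaling[1-{group}]"


def set_shell_var(text: str, name: str, value: str) -> str:
    """Rewrite an assumed 'name=...' (or 'export name=...') line to name="value"."""
    pattern = re.compile(rf"^(\s*(?:export\s+)?{re.escape(name)}=).*$", re.MULTILINE)
    new_text, count = pattern.subn(lambda m: f'{m.group(1)}"{value}"', text)
    if count == 0:
        raise KeyError(f"{name} not found")
    return new_text


if __name__ == "__main__":
    group = int(sys.argv[1])  # e.g. 2 on a Group 2 Tier B VM
    with open(ENV_SH) as f:
        text = f.read()
    text = set_shell_var(text, "flatfile_dir", "/vgenstore/flat_out")
    text = set_shell_var(text, "scaling_tables_dirs", scaling_dirs(group))
    with open(ENV_SH, "w") as f:
        f.write(text)
```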

- On each Tier B VM, run the following to populate and set up the TPCx-V database:

cd /opt/VDb/pgsql/scripts/linux

./setup.sh

This phase will take about one hour when loading a default set of flat files.

You will see some errors when the scripts create the role and language objects, which already exist, or when they try to drop objects (tables and indexes) that were already removed when the database was dropped. These errors are benign.

To back up the freshly loaded database, run the following commands on each Tier B VM:

rm -rf /dbstore/backup; mkdir /dbstore/backup; pg_dump -j 4 -Fd tpcv -f /dbstore/backup -Z 9

To restore the database from this backup (for example, to return it to its initial state), run the following commands on each Tier B VM:

pg_restore -j 4 -c --disable-triggers -d tpcv /dbstore/backup; cd /opt/VDb/pgsql/scripts/linux; ./analyze.sh

Use the instructions in this section to finish configuring a VM for use in Tier A.

Run this step as user root. This section only needs to be done on the Tier A virtual machines.

- Populate the odbc.ini file from the kit:

cat /opt/VDb/pgsql/prj/osfiles/odbc.ini >> /etc/odbc.ini

- Edit /etc/odbcinst.ini and set

Driver64=/usr/pgsql-9.3/lib/psqlodbcw.so

- Edit /etc/odbc.ini and set ServerName to the network names of the two Tier B VMs that serve this Tier A VM. Note that you need to create two Data Sources, one that points to VM2 and one that points to VM3. You can name these Data Sources anything you want, as long as the names match VCONN_DSN_LABELS in vcfg.properties; PSQL2 and PSQL3 are good choices.
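If you configure many Tier A VMs, the ServerName edit can be scripted. The Python sketch below is a hypothetical helper built on the standard configparser module; it assumes /etc/odbc.ini already contains [PSQL2] and [PSQL3] sections and uses placeholder host names. Because key spellings vary (Servername vs. ServerName), it reuses whatever spelling a section already has. Note that configparser drops comments when it rewrites the file.

```python
import configparser
import io


def set_dsn_servers(ini_text: str, servers: dict) -> str:
    """Point each DSN section at a Tier B host, e.g. {"PSQL2": "tierb-vm2"}."""
    cp = configparser.ConfigParser(interpolation=None)
    cp.optionxform = str  # keep key case such as "ServerName"
    cp.read_string(ini_text)
    for dsn, host in servers.items():
        if not cp.has_section(dsn):
            raise KeyError(f"[{dsn}] not found in odbc.ini")
        # Reuse an existing spelling (e.g. Servername) instead of adding a second key.
        key = next((k for k in cp[dsn] if k.lower() == "servername"), "ServerName")
        cp[dsn][key] = host
    buf = io.StringIO()
    cp.write(buf)
    return buf.getvalue()


if __name__ == "__main__":
    # Hypothetical host names; use the network names of this Group's VM2 and VM3.
    path = "/etc/odbc.ini"
    with open(path) as f:
        text = f.read()
    with open(path, "w") as f:
        f.write(set_dsn_servers(text, {"PSQL2": "tierb-vm2", "PSQL3": "tierb-vm3"}))
```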

- Test the TPCx-V and ODBC connections. First, make sure the Tier B VM is reachable on the network and the database is up and running. Do this by setting PGHOST to the network name of a Tier B VM, then running a simple database query from the Tier A VM, such as the following command with its expected output:

-bash-4.2$ export PGHOST=THE_NETWORK_NAME_OF_YOUR_TIERB

-bash-4.2$ psql tpcv -c "select count(*) from sector"

count

-------

12

(1 row)

Now, check that you can reach the same database via ODBC. Do this by using the tradestatus test application in /opt/VDb/pgsql/dml/test_programs, which connects to the database through the data source name PSQL1 (if you have used PSQL2 and PSQL3 in /etc/odbc.ini, replace the PSQL1 string in the source with PSQL2 or PSQL3, and re-make the application).

cd /opt/VDb/pgsql/dml/test_programs

make tradestatus

./tradestatus

Run this step as user postgres.

These instructions assume you have already used Section 2 of this document to set up four Tier A VMs, each connected to two Tier B database VMs, for a total of 12 VMs.

Optionally, you can create a 13th clone to be the prime driver. Although this is not required, it simplifies the process, since the prime driver will immediately have all the required software installed.

These instructions are intended to assist the user in setting up the TPCx-V prime driver, all subordinate drivers, market exchange emulators, and database connectors used to generate the database load. They also assume you have read the TPCx-V Reference Driver Design Document and are familiar with the concepts and terminology introduced there.

The following build instructions assume you are building the driver on Linux using GNU C and the Oracle Java Development Kit. Other environments may use different build scripts and build steps, though the fundamental build process should be very similar.

- Copy the reference driver kit file(s) to the desired directory on your prime driver.

- If a Java Development Kit has not already been installed, install one on the prime driver in the desired directory. (Adding a soft link called "java" in the /usr directory that points to the Java development kit's base directory will reduce the editing requirements in the Makefile.)

- From the base directory of the reference driver kit on the prime driver, go to the VDriver/prj/GNUMake directory.

- Modify the included Makefile as appropriate for your environment. Most fields should require no modification. However, be certain that JAVA_HOME points to the correct base directory for your Java development kit (JDK). Likewise, confirm that the C++ compiler and flags are correct for your environment.

- Run "make" from the GNUMake directory to build the reference driver.

When the reference driver has built successfully, you should find a file named "vdriver.zip" in the /opt/VDriver/dist directory.

The vdriver.zip file contains all of the necessary reference driver files. You can copy the zip file to the desired directory on each Tier A SUT VM, as well as to each system that will be used as a CE driver and Market Exchange Emulator, and extract all files on each system. For example, copy vdriver.zip from the /opt/VDriver/dist directory of the prime driver to the /opt/VDriver/dist directory of each of the 12 VMs, then on each VM run the following commands:

cd /opt/VDriver/jar

unzip -o ../dist/vdriver.zip -x vcfg.properties

Alternatively, the following instructions detail how to copy only the necessary files for each component.

- Unzip vdriver.zip on the prime driver (or any other system from which you can copy the extracted files to all of the target systems).

- Copy vconnector.jar and the "lib" directory to the desired directory on all Tier A VMs.

- Copy vmee.jar and the "lib" directory to the desired directory on all systems that will be used as market exchange emulators.

- Copy vce.jar and the "lib" directory to the desired directory on all systems that will be used as customer emulators.

- If the system on which vdriver.zip was extracted is not the system that will be used as the prime driver, or is not in the desired location on the prime driver, then copy vdriver.jar, reporter.jar, the "lib" directory, vcfg.properties, and testbed.properties to the desired directory on the prime driver.

Finally, confirm that you have a Java runtime environment (JRE) or development kit (JDK) installed on all systems that will be running these reference driver components, and that the installed version is the same major revision level as, or newer than, the one used to build the reference driver.

The configuration file (vcfg.properties) is where the benchmark run configuration is defined. This section deals only with the most commonly modified parameters. See Appendix A for details of configuration parameters not explained here.

VM_TILES

Set this to the number of Tiles being used for the test. (These setup instructions assume a single-Tile configuration.)

NUM_DRIVER_HOSTS

Set this value to the number of CE driver processes used in a test.

NUM_CE_DRIVERS

NUM_CE_DRIVERS[x]

Modify NUM_CE_DRIVERS to change the total number of CE driver threads used to generate load in a test. Note that these threads are evenly distributed between all CE driver processes. To override this default distribution of threads, use the indexed version to specify how many driver threads are run in each driver process.

NUM_MKT_EXCHANGES

Set this value to the number of Tiles of Tier A VMs. There is a single MEE process for each set of four Tier A VMs.

VDRIVER_RMI_HOST

VDRIVER_RMI_PORT

Set these values to the network interface hostname and port on which the prime driver will listen for RMI commands.

VCE_RMI_HOST[x]

VCE_RMI_PORT[x]

Set these values to the network interface hostname and port on which each vce client process will listen for RMI commands. Note that any vce processes not defined in this way will default to the value assigned to the non-indexed form of these parameters.

VCONN_RMI_HOST[tile][group]

VCONN_RMI_PORT[tile][group]

Set these values to the network interface hostname and port on which each vconnector process will listen for RMI commands. Note that any vconnector processes not defined in this way will default to the value assigned to the non-indexed form of these parameters.

VCONN_TXN_HOST[tile][group]

VCONN_TXN_PORT[tile][group]

Set these values to the network interface hostname and port on which each vconnector process will accept transactions from the vce or vmee processes. Note that any vconnector processes not defined in this way will default to the value assigned to the non-indexed form of these parameters.

VCONN_MEE_INDEX[tile][group]

Set this to the index of the vmee process used for that set of Tier A VMs. Note that this value must be identical for all vconnector processes in the same Tile. Effectively, this value corresponds to the Tile index for a group of four Tier A VMs.

VCONN_DSN_LABELS[tile][group]

Set this to the label strings for the databases used, as defined in your Tier A VM's odbc.ini file, with each label delimited by a comma. There must be as many label names as Tier B databases in use.

VCONN_NUM_DBS[tile][group]

Set this to the number of databases being used by the Tier A VM of the same Tile and Group indexes.

MEE_RMI_HOST[tile]

MEE_RMI_PORT[tile]

Set these values to the network interface hostname and port on which each vmee process will listen for RMI commands. Note that any vmee processes not defined in this way will default to the value assigned to the non-indexed form of these parameters.

MEE_TXN_HOST[tile][group]

MEE_TXN_PORT[tile][group]

Set these values to the network interface hostname and port on which each vmee process will accept transactions from VGen. Four of these pairs must be defined, one for each Tier A VM in each Group. Any vmee processes used but not defined in this way will default to the value assigned to the non-indexed form of these parameters.

RUN_ITERATION_SEC

Set this value to the length, in seconds, of a measurement interval. (Modifying this value from the default will result in a non-compliant run.)

WARMUP_SEC

RAMPUP_SEC

RAMPDN_SEC

Set these values to the number of seconds of ramp-up and ramp-down at the beginning and end of an entire test run, and the number of seconds of warm-up at the beginning of the first iteration of the test run.

NUM_RUN_PHASES

Set this to the number of run phases you wish to run in a single measurement interval. There must be a GROUP_PCT_DIST_PHASE defined for as many run phases as specified. (Modifying this value from the default will result in a non-compliant run.)

NUM_RUN_ITERATIONS

Set this to the number of times to run a full set of run phases.
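Before starting a run, it can save time to check that every VCONN_DSN_LABELS entry lists exactly as many labels as VCONN_NUM_DBS says. The Python sketch below is a hypothetical checker, not part of the kit. It assumes lines of the form NAME[tile][group] = "value" (modeled on the SORT_MIX_LOGS = "1" setting mentioned later in this Guide), and, by analogy with the other parameters, assumes that the non-indexed VCONN_NUM_DBS is the default when no indexed value is given.

```python
import re

# Assumed line format: NAME[tile][group] = "value" (indexes and quotes optional).
LINE = re.compile(r'^\s*([A-Z][A-Z0-9_]*)((?:\[\d+\])*)\s*=\s*"?([^"]*)"?\s*$')


def parse_vcfg(text: str) -> dict:
    """Map (name, indexes) to value, e.g. ("VCONN_NUM_DBS", (1, 1)) -> "2"."""
    props = {}
    for line in text.splitlines():
        if line.lstrip().startswith("#"):
            continue
        m = LINE.match(line)
        if m:
            idx = tuple(int(i) for i in re.findall(r"\d+", m.group(2)))
            props[(m.group(1), idx)] = m.group(3).strip()
    return props


def dsn_problems(props: dict) -> list:
    """Report VCONN_DSN_LABELS entries whose label count != VCONN_NUM_DBS."""
    problems = []
    for (name, idx), labels in props.items():
        if name != "VCONN_DSN_LABELS":
            continue
        # Fall back to the non-indexed VCONN_NUM_DBS (assumed default).
        num = props.get(("VCONN_NUM_DBS", idx), props.get(("VCONN_NUM_DBS", ())))
        count = len([label for label in labels.split(",") if label.strip()])
        if num is None or int(num) != count:
            where = "".join(f"[{i}]" for i in idx)
            problems.append(f"VCONN_DSN_LABELS{where}: {count} labels, VCONN_NUM_DBS={num}")
    return problems


if __name__ == "__main__":
    with open("vcfg.properties") as f:
        for problem in dsn_problems(parse_vcfg(f.read())):
            print(problem)
```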

Run this step as user postgres.

There are a number of shell scripts in the directory /opt/VDriver/scripts/rhel6 that facilitate running a test and post-processing the results.

runme.sh is the main script. It starts the component processes on all 13 VMs, runs the test, and post-processes the logs to produce results all the way to a TPCx-V Executive Summary. It can optionally collect stats, run the consistency tests, and run the Trade-Cleanup transaction before and after the run. The results of each run are saved in the directory /opt/VDriver/results/RUN_ID, with a unique RUN_ID for each run.

iostat_summary.sh averages the iostat output of each VM over the Measurement Interval, and prints a line for each database (virtual) disk of each Tier B VM.

killrun.sh logs into all 13 VMs and kills the benchmark processes. Note that killrun.sh gets the network names of the VMs for a given run from the file /opt/VDriver/results/RUN_ID/allhosts, which is created by runme.sh. If for some reason this file is not created (e.g., because you hit CTRL-C before runme.sh had a chance to create allhosts, or because of syntax issues in vcfg.properties), killrun.sh won't work, and you will need to manually log into each VM and kill the benchmark processes.

summary.sh gives a 1-line summary of the performance for a given run number.

trade-cleanup.sh runs the Trade-Cleanup transaction for the last completed run. Alternatively, the "-t" option to runme.sh will automatically run Trade-Cleanup after every run.

collect_config.sh, tools.sh, finish_tools.sh, killlocal.sh, and run_consistency.sh are auxiliary bash scripts that get invoked by other scripts.

Run this step as user postgres.

If, instead of using the supplied bash scripts, you prefer to manually launch individual component processes, follow the instructions below.

As the diagram above illustrates, the vconnector, vmee, and vce processes all receive RMI calls from the prime driver. As such, the hostname of the network interface on which the user intends to communicate with the prime driver must be specified when invoking these processes. Likewise, unless the user intends to listen on the default RMI network port for these RMI calls, the network port must also be specified when invoking these processes.

Go to the directory in which the vconnector jar file is installed on each Tier A VM and start each vconnector process using the following invocation string format:

java -jar [-Djava.library.path=<path_to_c_libs>] vconnector.jar -rh <hostname> [-rp <port_num>]

An example invocation string:

java -jar -Djava.library.path=lib vconnector.jar -rh tpcv_vm1 -rp 30000

Go to the directory in which the vmee jar file is installed on each system being used as a market exchange emulator and start each vmee process using the following invocation string format:

java -jar [-Djava.library.path=<path_to_c_libs>] vmee.jar -rh <hostname> [-rp <port_num>]

An example invocation string:

java -jar -Djava.library.path=lib vmee.jar -rh tpcv_mee1 -rp 32000

Be certain to specify different RMI ports for each vmee process on the same system and using the same network interface.

Go to the directory in which the vce jar file is installed on each system being used as a customer emulator and start each vce process using the following invocation string format:

java -jar [-Djava.library.path=<path_to_c_libs>] vce.jar -rh <hostname> [-rp <port_num>]

An example invocation string:

java -jar -Djava.library.path=lib vce.jar -rh tpcv_ce1 -rp 31000

Be certain to specify different RMI ports for each vce process on the same system and using the same network interface.
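When several vce (or vmee) processes share one system, giving each its own RMI port is easy to get wrong by hand. The Python sketch below is a hypothetical launcher that builds one java command per process with consecutive ports, using the -rh and -rp options shown above. It places the -Djava.library.path option before -jar, the conventional order for the java launcher; the host name, working directory, and port numbers are placeholders taken from the examples in this Guide.

```python
import subprocess


def component_cmds(jar: str, host: str, count: int, first_port: int,
                   lib_dir: str = "lib") -> list:
    """One java command per process, each with its own RMI port."""
    return [["java", f"-Djava.library.path={lib_dir}", "-jar", jar,
             "-rh", host, "-rp", str(first_port + i)]
            for i in range(count)]


def launch(cmds, workdir: str) -> list:
    """Start each process in the background; it then waits for the prime driver."""
    return [subprocess.Popen(cmd, cwd=workdir) for cmd in cmds]


if __name__ == "__main__":
    # Two vce processes on the hypothetical host tpcv_ce1, RMI ports 31000 and 31001.
    procs = launch(component_cmds("vce.jar", "tpcv_ce1", 2, 31000), "/opt/VDriver/jar")
    for p in procs:
        p.wait()
```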

Once all of these driver components are started, they listen on their RMI ports and wait for the prime driver to send them the needed configuration information and to start the test run.

After confirming that the values in the configuration file are complete and correct for the desired run characteristics, go to the directory in which the vdriver jar file is installed on the prime driver and start the vdriver process using the following invocation string format:

java -jar [-Djava.library.path=<path_to_c_libs>] vdriver.jar -i <flat_file_location>

An example invocation string:

java -jar -Djava.library.path=lib vdriver.jar -i /opt/tpcv/VGen/flat_in

This starts the test run. All vce, vmee, and vconnector processes, as well as the vdriver process, should terminate at the end of the run.

Run this step as user postgres.

The runme.sh script automatically invokes the reporter.jar application multiple times to sort the log files, merge them into a single log file, produce an ASCII file with the required performance and compliance data, and finally generate an Executive Summary.

Alternatively, you can follow the instructions below to invoke reporter.jar to post-process the logs.

During the measurement interval, each vce

and vmee process records the response time for each request type on a

per-phase basis in a transaction mix log. At the end of the run,

the log files from all of these vce and vmee processes must be pre-sorted

and then merged into a single mix log file using the reporter. (Note that

if you set SORT_MIX_LOGS = "1" in vcfg.properties, the vce and vmee

processes will sort their log files by timestamp at the end of the run.

Otherwise, it must be done by the reporter.)

The following instructions assume that you have collected all of the vce and vmee log files and placed them in the same directory as the reporter.jar file. They also assume that there is a "results" subdirectory containing a timestamped subdirectory for each run. The subdirectory for the run you want the reporter to process must contain a runtime.properties file with that run's configuration information.
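If you run the reporter by hand after several runs, the following sketch finds the runtime.properties file of the most recent run. It assumes the run subdirectories follow the YYYYMMDD-HHMMSS naming of the example results/20131118-142511, so that sorting the names also sorts them by time. The function name is hypothetical.

```python
from pathlib import Path


def latest_runtime_properties(results_dir="results"):
    """Return the runtime.properties path in the newest run subdirectory.

    Assumes run subdirectories are named YYYYMMDD-HHMMSS, so reverse
    lexical order is newest-first. Returns None if no run directory
    contains a runtime.properties file. Raises FileNotFoundError if
    results_dir itself does not exist.
    """
    runs = sorted(
        (d for d in Path(results_dir).iterdir() if d.is_dir()),
        key=lambda d: d.name,
        reverse=True,
    )
    for run in runs:
        candidate = run / "runtime.properties"
        if candidate.is_file():
            return candidate
    return None
```

The returned path can be passed directly to the reporter's -c flag.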

There are four flags that you may need to use when invoking the reporter:

- -s: this flag tells the reporter that the file or files it will process need to be sorted by timestamp

- -m: this flag tells the reporter to merge all sorted mix files into a single, time-sorted file

- -r: this flag tells the reporter to write a final transaction rate report based on the log entries from the single, merged, time-sorted file

- -es: this flag tells the reporter to write an HTML-formatted Executive Summary report based on the log entries from the single, merged, time-sorted file as well as the information contained in the testbed.properties file

If you pass none of these flags, the reporter expects the name of a single, sorted, merged log file that only requires writing a final transaction rate report. Otherwise, pass it the combination of these four flags that reflects the tasks you want the reporter to perform.

The reporter process is invoked using the following format:

java [-Djava.library.path=<path_to_c_libs>] -jar reporter.jar -c <path_to_runtime.properties> [-t <path_to_testbed.properties>] [-h] [-s] [-m] [-r] [-es] [-o <merged_log_name/path>] [-i <desired_sample_interval_seconds>] [-si <measurement_starting_iteration>] [-sp <measurement_starting_phase>] [-mp <total_measurement_phases>] <mix_log_file_name(s)>
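When scripting several reporter runs, it can help to assemble the argument list in one place. The following Python sketch builds a command from the flags in the format above. The function and its parameter names are hypothetical, and `-h` is omitted. The sketch places `-Djava.library.path` before `-jar`, which is the standard `java [options] -jar file` order.

```python
def reporter_cmd(runtime_props, mix_logs, *, lib_path=None, testbed=None,
                 sort=False, merge=False, rate=False, exec_summary=False,
                 output=None, interval=None, start_iter=None,
                 start_phase=None, phases=None):
    """Build an argument list for reporter.jar from the documented flags."""
    cmd = ["java"]
    if lib_path:
        # JVM options must come before -jar in the standard launcher syntax.
        cmd.append(f"-Djava.library.path={lib_path}")
    cmd += ["-jar", "reporter.jar", "-c", runtime_props]
    if testbed:
        cmd += ["-t", testbed]
    # Boolean task flags, in the order given by the invocation format.
    for enabled, flag in ((sort, "-s"), (merge, "-m"), (rate, "-r"),
                          (exec_summary, "-es")):
        if enabled:
            cmd.append(flag)
    # Flags that take a value; 0 is a valid value, so test against None.
    for value, flag in ((output, "-o"), (interval, "-i"), (start_iter, "-si"),
                        (start_phase, "-sp"), (phases, "-mp")):
        if value is not None:
            cmd += [flag, str(value)]
    cmd += list(mix_logs)
    return cmd
```

Run the resulting list with `subprocess.run(cmd, check=True)` from the directory that contains reporter.jar.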

To process the mix log files from the CE and MEE processes, you must first sort these files by timestamp and then merge them. The following is an example reporter invocation string that might be used for sorting a single mix log file:

java -Djava.library.path=lib -jar reporter.jar -c results/20131118-142511/runtime.properties -s CE_Mix-0.log

This would create a new log file named CE_Mix-0-sorted.log that is ready to be merged with any other pre-sorted logs from the same run.

Once all of your CE and MEE mix logs are sorted, they are ready to be merged into a single, combined mix log file. The following is an example reporter invocation string that might be used for merging multiple mix log files:

java -Djava.library.path=lib -jar reporter.jar -c results/20131118-142511/runtime.properties -m -o mixlog-merged.log CE_Mix-0-sorted.log CE_Mix-1-sorted.log MEE_Mix-0-sorted.log MEE_Mix-1-sorted.log

This would merge the four (pre-sorted) mix logs into a single sorted and merged log file named mixlog-merged.log. (A single, combined mix log file is required for creating the raw performance summary log file as well as an HTML-formatted Executive Summary document.)
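Conceptually, merging pre-sorted logs is a k-way merge. Because each input is already in timestamp order, the merged output can be produced in one streaming pass, which is why the logs need to be sorted first. The sketch below illustrates that idea with Python's `heapq.merge`. It is not the reporter's implementation. The mix log record layout is an assumption: the hypothetical `timestamp_of` helper treats the first comma-separated field as an integer timestamp.

```python
import heapq


def timestamp_of(line):
    # Hypothetical record layout: "<timestamp>,<rest of record>".
    return int(line.split(",", 1)[0])


def merge_sorted_logs(*logs):
    """Lazily merge iterables of log lines that are each sorted by timestamp.

    If any input is not sorted, the output is not guaranteed to be sorted,
    which is why each mix log must be sorted before merging.
    """
    return heapq.merge(*logs, key=timestamp_of)


def is_sorted(lines):
    """Return True if the lines are in non-decreasing timestamp order."""
    stamps = [timestamp_of(line) for line in lines]
    return all(a <= b for a, b in zip(stamps, stamps[1:]))
```

Open file objects can be passed to `merge_sorted_logs` directly, since they iterate line by line.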

Once you have a single sorted and merged log file to use as input, you can then create a performance summary log file by using a reporter invocation string similar to the following:

java -Djava.library.path=lib -jar reporter.jar -c results/20131118-142511/runtime.properties -i 30 mixlog-merged.log

Note that "-i 30" was not actually required, because 30 seconds is the default time sampling interval. Additionally, the "-r" flag was not used in this invocation. That flag is only needed when the performance summary log file is created in the same invocation as the merging step. If you provide the reporter with a single, pre-sorted and pre-merged mix log file, it creates the performance summary log whether or not the flag is included. Note also that the reporter tries to write this performance summary log to the same directory in which the driver wrote the runtime.properties file.

The HTML-formatted Executive Summary is created with an invocation similar to the one used for the performance summary log above. In fact, the performance summary log is created as part of the Executive Summary creation, so you do not need to create the former before creating the Executive Summary pages. However, the Executive Summary requires additional details about the hardware and software configuration used for the test. You must therefore also fill out the testbed.properties file and pass that file's name and location to the reporter (with -t) when invoking it to create an Executive Summary. The reporter invocation string would look similar to the following:

java -Djava.library.path=lib -jar reporter.jar -c results/20131118-142511/runtime.properties -t testbed.properties mixlog-merged.log

If you are unsure how to fill out the testbed.properties file, first invoke the reporter using the sample testbed.properties file provided with the kit. The resulting report should help clarify where and how these properties are used.

Up to this point, the reporter instructions have implicitly assumed that the test consisted of a single iteration of ten phases. However, neither the benchmark kit nor the reporter is so limited. If the user runs multiple iterations of the 10-phase test, any ten consecutive phases may be selected as the measurement interval for the report (or even more or fewer than ten phases for a non-compliant test).

To use this reporter functionality, include one or more of the following flags when invoking the reporter:

- -si <starting_iteration>: This flag specifies the iteration in which the chosen measurement interval begins. (Default: 0)

- -sp <starting_phase>: This flag specifies the phase number that is to be the first phase of the chosen measurement interval. (Default: 0)

- -mp <total_phases>: This flag specifies the total number of phases to include in the measurement interval. (Default: 10; any number other than 10 makes the measurement interval non-compliant)

As an example, assume that a test is run with two iterations of ten phases, and the log data suggests that the warmup time was too short: the system had still not reached a steady state when the first phase of the first iteration began. The user therefore wants to create a report that starts at the second phase of the first iteration and continues through the first phase of the second iteration. The reporter invocation would look similar to:

java -Djava.library.path=lib -jar reporter.jar -c results/20131118-142511/runtime.properties -t testbed.properties -si 0 -sp 1 mixlog-merged.log

Note that the -mp flag was not included because the default value is being used (and must be used for a compliant run). Similarly, because the default starting iteration is also 0, the -si flag could have been left out as well.
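Together, the -si, -sp, and -mp flags select a run of consecutive phases. The sketch below is a hypothetical helper, not part of the reporter, that lists the (iteration, phase) pairs those flags select. It assumes that iterations and phases are both numbered from 0, as the defaults suggest, and that the interval continues into the next iteration as in the example above. The `phases_per_iteration` argument corresponds to NUM_RUN_PHASES in vcfg.properties.

```python
def measurement_phases(start_iter=0, start_phase=0, total_phases=10,
                       phases_per_iteration=10):
    """List the (iteration, phase) pairs covered by -si/-sp/-mp.

    Phases are numbered from 0 within each iteration. Once the phases of
    one iteration are used up, the interval continues into the next one.
    """
    first = start_iter * phases_per_iteration + start_phase
    return [divmod(n, phases_per_iteration)
            for n in range(first, first + total_phases)]
```

With the example invocation (-si 0 -sp 1 and the default -mp 10), `measurement_phases(0, 1)` covers phases 1 through 9 of iteration 0, followed by phase 0 of iteration 1.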

The kit includes a simple vcfg.properties file. This configuration uses a single VM that contains the driver, the Tier A, and the single database that handles all of the Tier B transactions. This file specifies a short run (RUN_ITERATION_SEC = "600", that is, 10 minutes) with no phase changes.

There is also a vcfg.properties.5-tile file for a 5-Tile, 60-VM (plus a separate driver) configuration. This file specifies a 2-hour run with 10 phases.
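The figures for the 5-tile file follow from its configuration values:

- RUN_ITERATION_SEC is divided by NUM_RUN_PHASES to give each phase's duration, so 7200 s over 10 phases gives 720 s (12 minutes) per phase.
- At the reporter's default 30-second sampling interval, that is 24 sampling intervals per phase.
- The SUT VM count is VM_TILES × VM_GROUPS × 3 = 60.

The helper below is a hypothetical worked example of this arithmetic. It does not include the ramp-up, warmup, or ramp-down periods.

```python
def run_summary(vm_tiles, vm_groups, run_iteration_sec, num_run_phases,
                log_sample_sec=30, vms_per_group=3):
    """Derive a few numbers implied by vcfg.properties settings.

    Ramp-up, warmup, and ramp-down time are not included.
    """
    phase_sec = run_iteration_sec / num_run_phases
    return {
        "sut_vms": vm_tiles * vm_groups * vms_per_group,
        "phase_sec": phase_sec,
        "samples_per_phase": phase_sec / log_sample_sec,
    }


# 5-tile file: VM_TILES = 5, VM_GROUPS = 4, RUN_ITERATION_SEC = 7200, NUM_RUN_PHASES = 10
print(run_summary(5, 4, 7200, 10))
```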

###############################################################################

#

# VM Configuration

#

# The specification defines 1 to 6 Tiles. Each Tile contains 4 Groups.

# Each Group contains 3 VMs

#

VM_GROUPS = "4"

VM_TILES = "1"

#

###############################################################################

###############################################################################

#

# Runtime Configuration

#

# RUN_ITERATION_SEC is the total runtime for all load phases. This value is

# divided by the number of phases to determine the run duration for each phase.

# RAMPUP_SEC is the number of seconds to ramp up the number of CE processes

# WARMUP_SEC is the number of seconds to run before entering the first

# measurement phase

# RAMPDN_SEC is the number of seconds to ramp down the load at the end of the

# final measurement phase before terminating the run.

RUN_ITERATION_SEC = "600"

RAMPUP_SEC = "60"

WARMUP_SEC = "60"

RAMPDN_SEC = "60"

#

# RAMP_SLEEP_FACTOR controls the ramp-up and ramp-down transaction rate by

# adding a sleep delay between transactions. Set this value to "0" to disable.

# The best value for a linear ramp depends on the test configuration, but a

# value of "300" is likely a good starting point. Increase this value if the

# transaction rate ramps too steeply at the end of ramp-up; decrease if it ramps

# too steeply at the beginning of ramp-up.

RAMP_SLEEP_FACTOR = "0"

TIME_SYNC_TOLERANCE_MSEC = "1000"

VCE_POLL_PER_PHASE = "0"

# NUM_RUN_ITERATIONS is the number of times to run a full set of all load phases

# NUM_RUN_PHASES is the number of load phases in a single run iteration

NUM_RUN_ITERATIONS = "1"

NUM_RUN_PHASES = "1"

#

###############################################################################

###############################################################################

#

# VDriver Configuration

#

# VDriver (prime) hostname and RMI listening port

VDRIVER_RMI_HOST = "localhost"

VDRIVER_RMI_PORT = "30000"

#

###############################################################################

###############################################################################

#

# VCe Configuration

#

# NUM_DRIVER_HOSTS is the number of CE *processes* (i.e. how many invocations

# of vce.jar) that you are using to drive load against the SUT.

NUM_DRIVER_HOSTS = "1"

# NUM_CE_DRIVERS is the total number of CE (threads) that you want to drive load

# against the SUT VMs. If you are using multiple DRIVER_HOSTS, you can specify

# the number of CEs to start on each host by using the indexed version of this

# key. Otherwise, the CEs per host are distributed evenly between hosts.

NUM_CE_DRIVERS = "1"

# Indexes for DRIVER_HOST (in NUM_CE_DRIVERS) start from 0

#NUM_CE_DRIVERS[0] = "2"

#

# Default and index-specific VCe driver hostnames and ports for RMI

# communication between processes (These let the VDriver process know where to

# contact the VCE processes to send benchmark control commands). There must be

# one host/port pair combination for each NUM_DRIVER_HOSTS (additional entries

# are ignored).

VCE_RMI_HOST = "localhost"

VCE_RMI_PORT = "31000"

# Indexes for VCE start from 0

VCE_RMI_HOST[0] = "localhost"

VCE_RMI_PORT[0] = "31000"

VCE_RMI_HOST[1] = "localhost"

VCE_RMI_PORT[1] = "31001"

VCE_RMI_HOST[2] = "localhost"

VCE_RMI_PORT[2] = "31002"

#

# CE log file names

CE_MIX_LOG = "CE_Mix.log"

CE_ERR_LOG = "CE_Error.log"

# CE_EXIT_DELAY_SEC is the number of seconds the user wants to wait to allow

# "cleanup" before final exit. This is mostly in case there are "retries" going

# on that need to have time to time out before a final exit.

CE_EXIT_DELAY_SEC = "10"

#

###############################################################################

###############################################################################

#

# VMEE Configuration

#

# The number of VMEE processes the VDriver should talk to.

NUM_VMEE_PROCESSES = "1"

# These settings specify the host name and port number a given VMEE is

# listening on. vDriver will use these to connect to the VMEE processes. The

# values specified here must match those used on the VMEE command line

# (-rh and -rp) when starting a given VMEE process.

#

# Unindexed value - used as a default if a given indexed value is not specified.

MEE_RMI_HOST = "localhost"

MEE_RMI_PORT = "32000"

#

# Indexed values (0 to (NUM_VMEE_PROCESSES - 1) will be used if they exist).

MEE_RMI_HOST[0] = "localhost"

MEE_RMI_PORT[0] = "32000"

#

#MEE_RMI_HOST[1] = "localhost"

#MEE_RMI_PORT[1] = "32001"

#

#MEE_RMI_HOST[2] = "localhost"

#MEE_RMI_PORT[2] = "32002"

#

#MEE_RMI_HOST[3] = "localhost"

#MEE_RMI_PORT[3] = "32003"

# Each VM TILE requires an MEE.

# Each VMEE process can manage 1 or more MEEs.

# MEEs are assigned in a round-robin manner across the available VMEEs.

# The following policies are supported.

#

# GroupMajor - is a group-major tile-minor distribution. For example:

# Group 1 Tile 1

# Group 1 Tile 2

# Group 1 Tile 3

# Group 1 Tile 4

# Group 2 Tile 1

# Group 2 Tile 2

# Group 2 Tile 3

# Group 2 Tile 4

# ...

#

# TileMajor - is a tile-major group-minor distribution. For example:

# Group 1 Tile 1

# Group 2 Tile 1

# Group 3 Tile 1

# Group 4 Tile 1

# Group 1 Tile 2

# Group 2 Tile 2

# Group 3 Tile 2

# Group 4 Tile 2

# ...

#

MEE_DISTRIBUTION_POLICY = "TileMajor"

# These settings specify individual MEE configuration options.

#

# MEE_TXN_HOST - host name the MEE will listen on (for connections from SUT

# SendToMarket)

# MEE_TXN_PORT - port number the MEE will listen on (for connections from SUT

# SendToMarket)

# MEE_MF_POOL - Size of the Market-Feed thread pool (should be 1 for TPCx-V)

# MEE_TR_POOL - Size of the Trade-Result thread pool (adjust this based on load)

#

# Unindexed value - used as a default if a given indexed value is not specified.

MEE_TXN_HOST = "localhost"

MEE_TXN_PORT = "30100"

MEE_MF_POOL = "1"

MEE_TR_POOL = "1"

#

# (Indexed values will be used if they exist. Add more entries for additional

# tiles.)

#

# Tile 1 Group 1

MEE_TXN_HOST[1][1] = "localhost"

MEE_TXN_PORT[1][1] = "30100"

MEE_MF_POOL[1][1] = "1"

MEE_TR_POOL[1][1] = "1"

# Tile 1 Group 2

MEE_TXN_HOST[1][2] = "localhost"

MEE_TXN_PORT[1][2] = "30101"

MEE_MF_POOL[1][2] = "1"

MEE_TR_POOL[1][2] = "1"

# Tile 1 Group 3

MEE_TXN_HOST[1][3] = "localhost"

MEE_TXN_PORT[1][3] = "30102"

MEE_MF_POOL[1][3] = "1"

MEE_TR_POOL[1][3] = "1"

# Tile 1 Group 4

MEE_TXN_HOST[1][4] = "localhost"

MEE_TXN_PORT[1][4] = "30103"

MEE_MF_POOL[1][4] = "1"

MEE_TR_POOL[1][4] = "1"

# These are used as base file names for logging purposes. Group and Tile IDs

# will be appended to these base names along with a ".log" suffix to make

# unique file names for each MEE.

MEE_LOG = "MEE_Msg"

MEE_MIX_LOG = "MEE_Mix"

MEE_ERR_LOG = "MEE_Err"

#

###############################################################################

###############################################################################

#

# VConnector Configuration

#

# Number of times to retry a failed DB transaction before reporting failure

NUM_TXN_RETRIES = "5"

# Default and index-specific VConnector hostnames and ports

VCONN_RMI_HOST = "localhost"

VCONN_RMI_PORT = "33000"

VCONN_TXN_HOST = "localhost"

VCONN_TXN_PORT = "43000"

VCONN_DSN_LABELS = "PSQL1"

VCONN_NUM_DBS = "1"

# Index-specific hostnames and ports. Add more entries for additional tiles.

# Tile 1 Group 1

VCONN_RMI_HOST[1][1] = "localhost"

VCONN_RMI_PORT[1][1] = "33000"

VCONN_TXN_HOST[1][1] = "localhost"

VCONN_TXN_PORT[1][1] = "43000"

VCONN_DSN_LABELS[1][1] = "PSQL1"

VCONN_NUM_DBS[1][1] = "1"

# Tile 1 Group 2

VCONN_RMI_HOST[1][2] = "localhost"

VCONN_RMI_PORT[1][2] = "33001"

VCONN_TXN_HOST[1][2] = "localhost"

VCONN_TXN_PORT[1][2] = "43001"

VCONN_DSN_LABELS[1][2] = "PSQL1"

VCONN_NUM_DBS[1][2] = "1"

# Tile 1 Group 3

VCONN_RMI_HOST[1][3] = "localhost"

VCONN_RMI_PORT[1][3] = "33002"

VCONN_TXN_HOST[1][3] = "localhost"

VCONN_TXN_PORT[1][3] = "43002"

VCONN_DSN_LABELS[1][3] = "PSQL1"

VCONN_NUM_DBS[1][3] = "1"

# Tile 1 Group 4

VCONN_RMI_HOST[1][4] = "localhost"

VCONN_RMI_PORT[1][4] = "33003"

VCONN_TXN_HOST[1][4] = "localhost"

VCONN_TXN_PORT[1][4] = "43003"

VCONN_DSN_LABELS[1][4] = "PSQL1"

VCONN_NUM_DBS[1][4] = "1"

#

###############################################################################

###############################################################################

#

# Group-specific Load Configuration

#

# Default values

CUST_CONFIGURED = "5000"

CUST_ACTIVE = "5000"

SCALE_FACTOR = "500"

LOAD_RATE = "2000"

INIT_TRADE_DAYS = "125"

# Group-specific values

CUST_CONFIGURED[1] = "5000"

CUST_ACTIVE[1] = "5000"

SCALE_FACTOR[1] = "500"

LOAD_RATE[1] = "2000"

INIT_TRADE_DAYS[1] = "125"

#

CUST_CONFIGURED[2] = "5000"

CUST_ACTIVE[2] = "5000"

SCALE_FACTOR[2] = "500"

LOAD_RATE[2] = "2000"

INIT_TRADE_DAYS[2] = "125"

#

CUST_CONFIGURED[3] = "5000"

CUST_ACTIVE[3] = "5000"

SCALE_FACTOR[3] = "500"

LOAD_RATE[3] = "2000"

INIT_TRADE_DAYS[3] = "125"

#

CUST_CONFIGURED[4] = "5000"

CUST_ACTIVE[4] = "5000"

SCALE_FACTOR[4] = "500"

LOAD_RATE[4] = "2000"

INIT_TRADE_DAYS[4] = "125"

#

#GROUP_PCT_DIST_PHASE[1] = "1.0"

GROUP_PCT_DIST_PHASE[1] = "0.10,0.20,0.30,0.40"

GROUP_PCT_DIST_PHASE[2] = "0.05,0.10,0.25,0.60"

GROUP_PCT_DIST_PHASE[3] = "0.10,0.05,0.20,0.65"

GROUP_PCT_DIST_PHASE[4] = "0.05,0.10,0.05,0.80"

GROUP_PCT_DIST_PHASE[5] = "0.10,0.05,0.30,0.55"

GROUP_PCT_DIST_PHASE[6] = "0.05,0.35,0.20,0.40"

GROUP_PCT_DIST_PHASE[7] = "0.35,0.25,0.15,0.25"

GROUP_PCT_DIST_PHASE[8] = "0.05,0.65,0.20,0.10"

GROUP_PCT_DIST_PHASE[9] = "0.10,0.15,0.70,0.05"

GROUP_PCT_DIST_PHASE[10] = "0.05,0.10,0.65,0.20"

# Use DB_CONN_BUFFER_PCT_GROUP to modify the initial number of connections

# opened by the CEs to each Tier A VM for each group. Use values greater than

# 1.0 to increase the number of connections (up to the theoretical maximum) and

# values less than 1.0 to decrease the number of initial connections.

DB_CONN_BUFFER_PCT_GROUP[1] = "1.0"

DB_CONN_BUFFER_PCT_GROUP[2] = "1.0"

DB_CONN_BUFFER_PCT_GROUP[3] = "1.0"

DB_CONN_BUFFER_PCT_GROUP[4] = "1.0"

#

###############################################################################

###############################################################################

#

# Misc Configuration Parameters

#

RESULT_DIR = "results"

LOG_DIR = "."

SORT_MIX_LOGS = "0"

SORTED_LOG_NAME_APPEND = "sorted"

LOG_SAMPLE_SEC = "30"

# VGEN_INPUT_FILE_DIR = ""

DEBUG_LEVEL = "0"

SUPPRESS_WARNINGS = "1"

FORCE_COMPLIANT_TX_RATE = "0"

CHECK_TIME_SYNC = "0"

COLLECT_CLIENT_LOGS = "0"

NUM_TXN_TYPES = "12"

# NUM_RESP_METRICS are the response-related metrics:

# 0 - success count

# 1 - success msec

# 2 - fail count

# 3 - fail msec

NUM_RESP_METRICS = "4"

# NUM_TXN_METRICS is the number of metrics created for report purposes

NUM_TXN_METRICS = "5"

TXN_METRIC[0] = "SUCCESS COUNT"

TXN_METRIC[1] = "FAIL COUNT"

TXN_METRIC[2] = "AGG RESP TIME"

TXN_METRIC[3] = "MIN RESP TIME"

TXN_METRIC[4] = "MAX RESP TIME"

CE_MIX_PARAM_INDEX = "0,1"

# BrokerVolumeMixLevel,CustomerPositionMixLevel,

# MarketWatchMixLevel,SecurityDetailMixLevel,

# TradeLookupMixLevel,TradeOrderMixLevel,

# TradeStatusMixLevel,TradeUpdateMixLevel

#CE_MIX_PARAM_0 = "0,0,0,0,0,1000,0,0"

CE_MIX_PARAM_0 = "39,150,170,160,90,101,180,10"

# CE_MIX_PARAM_1 = "59,130,180,140,80,101,190,20"

# TXN_TYPE

# "-1" = EGEN-GENERATED MIX

# "0" = SECURITY_DETAIL

# "1" = BROKER_VOLUME

# "2" = CUSTOMER_POSITION

# "3" = MARKET_WATCH

# "4" = TRADE_STATUS

# "5" = TRADE_LOOKUP

# "6" = TRADE_ORDER

# "7" = TRADE_UPDATE
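Two details of the file above lend themselves to a quick check.

- Each GROUP_PCT_DIST_PHASE[n] entry lists four fractions, one per group, and in the sample every set sums to 1.0. The sketch below assumes the fractions are meant to sum to 1.0 and flags any phase whose fractions do not.
- The sketch also shows how MEEs would be handed out under the two MEE_DISTRIBUTION_POLICY values. It follows the round-robin description in the comments: the i-th (tile, group) in policy order goes to VMEE process i mod NUM_VMEE_PROCESSES.

The function names are hypothetical, and the kit's own assignment code may differ in detail.

```python
import math


def bad_group_pct_phases(dist, tolerance=1e-6):
    """Return phase numbers whose per-group fractions do not sum to 1.0.

    dist maps phase number -> GROUP_PCT_DIST_PHASE string,
    e.g. {1: "0.10,0.20,0.30,0.40"}.
    """
    return [phase for phase, value in sorted(dist.items())
            if not math.isclose(sum(float(x) for x in value.split(",")), 1.0,
                                abs_tol=tolerance)]


def mee_assignment(tiles, groups, num_vmee, policy="TileMajor"):
    """Map (tile, group) -> VMEE index, round-robin in policy order."""
    if policy == "TileMajor":
        # Tile is the outer loop: Group 1..N of Tile 1, then Tile 2, ...
        order = [(t, g) for t in range(1, tiles + 1)
                 for g in range(1, groups + 1)]
    elif policy == "GroupMajor":
        # Group is the outer loop: Tile 1..N of Group 1, then Group 2, ...
        order = [(t, g) for g in range(1, groups + 1)
                 for t in range(1, tiles + 1)]
    else:
        raise ValueError(f"unsupported policy: {policy}")
    return {pair: i % num_vmee for i, pair in enumerate(order)}
```

With NUM_VMEE_PROCESSES = "1", as in both sample files, every MEE is assigned to the same VMEE process regardless of policy.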

###############################################################################

#

# VM Configuration

#

# The specification defines 1 to 6 Tiles. Each Tile contains 4 Groups.

# Each Group contains 3 VMs

#

VM_GROUPS = "4"

VM_TILES = "5"

#

###############################################################################

###############################################################################

#

# Runtime Configuration

#

# RUN_ITERATION_SEC is the total runtime for all load phases. This value is

# divided by the number of phases to determine the run duration for each phase.

# RAMPUP_SEC is the number of seconds to ramp up the number of CE processes

# WARMUP_SEC is the number of seconds to run before entering the first

# measurement phase

# RAMPDN_SEC is the number of seconds to ramp down the load at the end of the

# final measurement phase before terminating the run.

RUN_ITERATION_SEC = "7200"

RAMPUP_SEC = "120"

WARMUP_SEC = "720"

RAMPDN_SEC = "300"

#

# RAMP_SLEEP_FACTOR controls the ramp-up and ramp-down transaction rate by

# adding a sleep delay between transactions. Set this value to "0" to disable.

# The best value for a linear ramp depends on the test configuration, but a

# value of "300" is likely a good starting point. Increase this value if the

# transaction rate ramps too steeply at the end of ramp-up; decrease if it ramps

# too steeply at the beginning of ramp-up.

RAMP_SLEEP_FACTOR = "0"

TIME_SYNC_TOLERANCE_MSEC = "1000"

VCE_POLL_PER_PHASE = "23"

# NUM_RUN_ITERATIONS is the number of times to run a full set of all load phases

# NUM_RUN_PHASES is the number of load phases in a single run iteration

NUM_RUN_ITERATIONS = "1"

NUM_RUN_PHASES = "10"

#

###############################################################################

###############################################################################

#

# VDriver Configuration

#

# VDriver (prime) hostname and RMI listening port

VDRIVER_RMI_HOST = "w1-tpc-vm32"

VDRIVER_RMI_PORT = "30000"

#

###############################################################################

###############################################################################

#

# VCe Configuration

#

# NUM_DRIVER_HOSTS is the number of CE *processes* (i.e. how many invocations

# of vce.jar) that you are using to drive load against the SUT.

NUM_DRIVER_HOSTS = "5"

# NUM_CE_DRIVERS is the total number of CE (threads) that you want to drive load

# against the SUT VMs. If you are using multiple DRIVER_HOSTS, you can specify

# the number of CEs to start on each host by using the indexed version of this

# key. Otherwise, the CEs per host are distributed evenly between hosts.

NUM_CE_DRIVERS = "XXX"

# Indexes for DRIVER_HOST (in NUM_CE_DRIVERS) start from 0

#NUM_CE_DRIVERS[0] = "2"

#

# Default and index-specific VCe driver hostnames and ports for RMI

# communication between processes (These let the VDriver process know where to

# contact the VCE processes to send benchmark control commands). There must be

# one host/port pair combination for each NUM_DRIVER_HOSTS (additional entries

# are ignored).

VCE_RMI_HOST = "localhost"

VCE_RMI_PORT = "31000"

# Indexes for VCE start from 0

VCE_RMI_HOST[0] = "w1-tpc-vm32"

VCE_RMI_PORT[0] = "31000"

VCE_RMI_HOST[1] = "w1-tpc-vm32"

VCE_RMI_PORT[1] = "31001"

VCE_RMI_HOST[2] = "w1-tpc-vm32"

VCE_RMI_PORT[2] = "31002"

#

VCE_RMI_HOST[3] = "w1-tpc-vm32"

VCE_RMI_PORT[3] = "31003"

#

VCE_RMI_HOST[4] = "w1-tpc-vm32"

VCE_RMI_PORT[4] = "31004"

#

# CE log file names

CE_MIX_LOG = "CE_Mix.log"

CE_ERR_LOG = "CE_Error.log"

# CE_EXIT_DELAY_SEC is the number of seconds the user wants to wait to allow

# "cleanup" before final exit. This is mostly in case there are "retries" going

# on that need to have time to time out before a final exit.

CE_EXIT_DELAY_SEC = "10"

#

###############################################################################

###############################################################################

#

# VMEE Configuration

#

# The number of VMEE processes the VDriver should talk to.

NUM_VMEE_PROCESSES = "1"

# These settings specify the host name and port number a given VMEE is

# listening on. vDriver will use these to connect to the VMEE processes. The

# values specified here must match those used on the VMEE command line

# (-rh and -rp) when starting a given VMEE process.

#

# Unindexed value - used as a default if a given indexed value is not specified.

MEE_RMI_HOST = "localhost"

MEE_RMI_PORT = "32000"

#

# Indexed values (0 to (NUM_VMEE_PROCESSES - 1) will be used if they exist).

MEE_RMI_HOST[0] = "w1-tpc-vm32"

MEE_RMI_PORT[0] = "32000"

#

#MEE_RMI_HOST[1] = "localhost"

#MEE_RMI_PORT[1] = "32001"

#

#MEE_RMI_HOST[2] = "localhost"

#MEE_RMI_PORT[2] = "32002"

#

#MEE_RMI_HOST[3] = "localhost"

#MEE_RMI_PORT[3] = "32003"

# Each VM TILE requires an MEE.

# Each VMEE process can manage 1 or more MEEs.

# MEEs are assigned in a round-robin manner across the available VMEEs.

# The following policies are supported.

#

# GroupMajor - is a group-major tile-minor distribution. For example:

# Group 1 Tile 1

# Group 1 Tile 2

# Group 1 Tile 3

# Group 1 Tile 4

# Group 2 Tile 1

# Group 2 Tile 2

# Group 2 Tile 3

# Group 2 Tile 4

# ...

#

# TileMajor - is a tile-major group-minor distribution. For example:

# Group 1 Tile 1

# Group 2 Tile 1

# Group 3 Tile 1

# Group 4 Tile 1

# Group 1 Tile 2

# Group 2 Tile 2

# Group 3 Tile 2

# Group 4 Tile 2

# ...

#

MEE_DISTRIBUTION_POLICY = "TileMajor"

# These settings specify individual MEE configuration options.

#

# MEE_TXN_HOST - host name the MEE will listen on (for connections from SUT

# SendToMarket)

# MEE_TXN_PORT - port number the MEE will listen on (for connections from SUT

# SendToMarket)

# MEE_MF_POOL - Size of the Market-Feed thread pool (should be 1 for TPCx-V)

# MEE_TR_POOL - Size of the Trade-Result thread pool (adjust this based on load)

#

# Unindexed value - used as a default if a given indexed value is not specified.

MEE_TXN_HOST = "localhost"

MEE_TXN_PORT = "30100"

MEE_MF_POOL = "1"

MEE_TR_POOL = "1"

#

# (Indexed values will be used if they exist. Add more entries for additional

# tiles.)

#

# Tile 1 Group 1

MEE_TXN_HOST[1][1] = "w1-tpc-vm32"

MEE_TXN_PORT[1][1] = "30100"

MEE_MF_POOL[1][1] = "1"

MEE_TR_POOL[1][1] = "5"

# Tile 1 Group 2

MEE_TXN_HOST[1][2] = "w1-tpc-vm32"

MEE_TXN_PORT[1][2] = "30110"

MEE_MF_POOL[1][2] = "1"

MEE_TR_POOL[1][2] = "9"

# Tile 1 Group 3

MEE_TXN_HOST[1][3] = "w1-tpc-vm32"

MEE_TXN_PORT[1][3] = "30120"

MEE_MF_POOL[1][3] = "1"

MEE_TR_POOL[1][3] = "11"

# Tile 1 Group 4

MEE_TXN_HOST[1][4] = "w1-tpc-vm32"

MEE_TXN_PORT[1][4] = "30130"

MEE_MF_POOL[1][4] = "1"

MEE_TR_POOL[1][4] = "15"

# Tile 2 Group 1

MEE_TXN_HOST[2][1] = "w1-tpc-vm32"

MEE_TXN_PORT[2][1] = "30101"

MEE_MF_POOL[2][1] = "1"

MEE_TR_POOL[2][1] = "5"

# Tile 2 Group 2

MEE_TXN_HOST[2][2] = "w1-tpc-vm32"

MEE_TXN_PORT[2][2] = "30111"

MEE_MF_POOL[2][2] = "1"

MEE_TR_POOL[2][2] = "9"

# Tile 2 Group 3

MEE_TXN_HOST[2][3] = "w1-tpc-vm32"

MEE_TXN_PORT[2][3] = "30121"

MEE_MF_POOL[2][3] = "1"

MEE_TR_POOL[2][3] = "11"

# Tile 2 Group 4

MEE_TXN_HOST[2][4] = "w1-tpc-vm32"

MEE_TXN_PORT[2][4] = "30131"

MEE_MF_POOL[2][4] = "1"

MEE_TR_POOL[2][4] = "15"

# Tile 3 Group 1

MEE_TXN_HOST[3][1] = "w1-tpc-vm32"

MEE_TXN_PORT[3][1] = "30102"

MEE_MF_POOL[3][1] = "1"

MEE_TR_POOL[3][1] = "5"

# Tile 3 Group 2

MEE_TXN_HOST[3][2] = "w1-tpc-vm32"

MEE_TXN_PORT[3][2] = "30112"

MEE_MF_POOL[3][2] = "1"

MEE_TR_POOL[3][2] = "9"

# Tile 3 Group 3

MEE_TXN_HOST[3][3] = "w1-tpc-vm32"

MEE_TXN_PORT[3][3] = "30122"

MEE_MF_POOL[3][3] = "1"

MEE_TR_POOL[3][3] = "11"

# Tile 3 Group 4

MEE_TXN_HOST[3][4] = "w1-tpc-vm32"

MEE_TXN_PORT[3][4] = "30132"

MEE_MF_POOL[3][4] = "1"

MEE_TR_POOL[3][4] = "15"

# Tile 4 Group 1

MEE_TXN_HOST[4][1] = "w1-tpc-vm32"

MEE_TXN_PORT[4][1] = "30103"

MEE_MF_POOL[4][1] = "1"

MEE_TR_POOL[4][1] = "5"

# Tile 4 Group 2

MEE_TXN_HOST[4][2] = "w1-tpc-vm32"

MEE_TXN_PORT[4][2] = "30113"

MEE_MF_POOL[4][2] = "1"

MEE_TR_POOL[4][2] = "9"

# Tile 4 Group 3

MEE_TXN_HOST[4][3] = "w1-tpc-vm32"

MEE_TXN_PORT[4][3] = "30123"

MEE_MF_POOL[4][3] = "1"

MEE_TR_POOL[4][3] = "11"

# Tile 4 Group 4

MEE_TXN_HOST[4][4] = "w1-tpc-vm32"

MEE_TXN_PORT[4][4] = "30133"

MEE_MF_POOL[4][4] = "1"

MEE_TR_POOL[4][4] = "15"

# Tile 5 Group 1

MEE_TXN_HOST[5][1] = "w1-tpc-vm32"

MEE_TXN_PORT[5][1] = "30104"

MEE_MF_POOL[5][1] = "1"

MEE_TR_POOL[5][1] = "5"

# Tile 5 Group 2

MEE_TXN_HOST[5][2] = "w1-tpc-vm32"

MEE_TXN_PORT[5][2] = "30114"

MEE_MF_POOL[5][2] = "1"

MEE_TR_POOL[5][2] = "9"

# Tile 5 Group 3

MEE_TXN_HOST[5][3] = "w1-tpc-vm32"

MEE_TXN_PORT[5][3] = "30124"

MEE_MF_POOL[5][3] = "1"

MEE_TR_POOL[5][3] = "11"

# Tile 5 Group 4

MEE_TXN_HOST[5][4] = "w1-tpc-vm32"

MEE_TXN_PORT[5][4] = "30134"

MEE_MF_POOL[5][4] = "1"

MEE_TR_POOL[5][4] = "15"

# These are used as base file names for logging purposes. Group and Tile IDs

# will be appended to these base names along with a ".log" suffix to make

# unique file names for each MEE.

MEE_LOG = "MEE_Msg"

MEE_MIX_LOG = "MEE_Mix"

MEE_ERR_LOG = "MEE_Err"

#

###############################################################################

###############################################################################

#

# VConnector Configuration

#

# Number of times to retry a failed DB transaction before reporting failure

NUM_TXN_RETRIES = "25"

# Default and index-specific VConnector hostnames and ports

VCONN_RMI_HOST = "localhost"

VCONN_RMI_PORT = "33000"

VCONN_TXN_HOST = "localhost"

VCONN_TXN_PORT = "43000"

VCONN_DSN_LABELS = "PSQL1"

VCONN_NUM_DBS = "1"

# Index-specific hostnames and ports. Add more entries for additional tiles.

# Tile 1 Group 1

VCONN_RMI_HOST[1][1] = "w1-tpcv-vm1"

VCONN_RMI_PORT[1][1] = "33000"

VCONN_TXN_HOST[1][1] = "w1-tpcv-vm1"

VCONN_TXN_PORT[1][1] = "43000"

VCONN_DSN_LABELS[1][1] = "PSQL2,PSQL3"

VCONN_NUM_DBS[1][1] = "2"

# Tile 1 Group 2

VCONN_RMI_HOST[1][2] = "w1-tpcv-vm4"

VCONN_RMI_PORT[1][2] = "33010"

VCONN_TXN_HOST[1][2] = "w1-tpcv-vm4"

VCONN_TXN_PORT[1][2] = "43010"

VCONN_DSN_LABELS[1][2] = "PSQL2,PSQL3"

VCONN_NUM_DBS[1][2] = "2"

# Tile 1 Group 3

VCONN_RMI_HOST[1][3] = "w1-tpcv-vm7"

VCONN_RMI_PORT[1][3] = "33020"

VCONN_TXN_HOST[1][3] = "w1-tpcv-vm7"

VCONN_TXN_PORT[1][3] = "43020"

VCONN_DSN_LABELS[1][3] = "PSQL2,PSQL3"

VCONN_NUM_DBS[1][3] = "2"

# Tile 1 Group 4

VCONN_RMI_HOST[1][4] = "w1-tpcv-vm10"

VCONN_RMI_PORT[1][4] = "33030"

VCONN_TXN_HOST[1][4] = "w1-tpcv-vm10"

VCONN_TXN_PORT[1][4] = "43030"

VCONN_DSN_LABELS[1][4] = "PSQL2,PSQL3"

VCONN_NUM_DBS[1][4] = "2"

# Tile 2 Group 1

VCONN_RMI_HOST[2][1] = "w1-tpcv-vm13"

VCONN_RMI_PORT[2][1] = "33001"

VCONN_TXN_HOST[2][1] = "w1-tpcv-vm13"

VCONN_TXN_PORT[2][1] = "43001"

VCONN_DSN_LABELS[2][1] = "PSQL2,PSQL3"

VCONN_NUM_DBS[2][1] = "2"

# Tile 2 Group 2

VCONN_RMI_HOST[2][2] = "w1-tpcv-vm16"

VCONN_RMI_PORT[2][2] = "33011"

VCONN_TXN_HOST[2][2] = "w1-tpcv-vm16"

VCONN_TXN_PORT[2][2] = "43011"

VCONN_DSN_LABELS[2][2] = "PSQL2,PSQL3"

VCONN_NUM_DBS[2][2] = "2"

# Tile 2 Group 3

VCONN_RMI_HOST[2][3] = "w1-tpcv-vm19"

VCONN_RMI_PORT[2][3] = "33021"

VCONN_TXN_HOST[2][3] = "w1-tpcv-vm19"

VCONN_TXN_PORT[2][3] = "43021"

VCONN_DSN_LABELS[2][3] = "PSQL2,PSQL3"

VCONN_NUM_DBS[2][3] = "2"

# Tile 2 Group 4

VCONN_RMI_HOST[2][4] = "w1-tpcv-vm22"

VCONN_RMI_PORT[2][4] = "33031"

VCONN_TXN_HOST[2][4] = "w1-tpcv-vm22"

VCONN_TXN_PORT[2][4] = "43031"

VCONN_DSN_LABELS[2][4] = "PSQL2,PSQL3"

VCONN_NUM_DBS[2][4] = "2"

# Tile 3 Group 1

VCONN_RMI_HOST[3][1] = "w1-tpcv-vm25"

VCONN_RMI_PORT[3][1] = "33002"

VCONN_TXN_HOST[3][1] = "w1-tpcv-vm25"

VCONN_TXN_PORT[3][1] = "43002"

VCONN_DSN_LABELS[3][1] = "PSQL2,PSQL3"

VCONN_NUM_DBS[3][1] = "2"

# Tile 3 Group 2

VCONN_RMI_HOST[3][2] = "w1-tpcv-vm28"

VCONN_RMI_PORT[3][2] = "33012"

VCONN_TXN_HOST[3][2] = "w1-tpcv-vm28"

VCONN_TXN_PORT[3][2] = "43012"

VCONN_DSN_LABELS[3][2] = "PSQL2,PSQL3"

VCONN_NUM_DBS[3][2] = "2"

# Tile 3 Group 3

VCONN_RMI_HOST[3][3] = "w1-tpcv-vm-31"

VCONN_RMI_PORT[3][3] = "33022"

VCONN_TXN_HOST[3][3] = "w1-tpcv-vm-31"

VCONN_TXN_PORT[3][3] = "43022"

VCONN_DSN_LABELS[3][3] = "PSQL2,PSQL3"

VCONN_NUM_DBS[3][3] = "2"

# Tile 3 Group 4

VCONN_RMI_HOST[3][4] = "w1-tpcv-vm-34"

VCONN_RMI_PORT[3][4] = "33032"

VCONN_TXN_HOST[3][4] = "w1-tpcv-vm-34"

VCONN_TXN_PORT[3][4] = "43032"

VCONN_DSN_LABELS[3][4] = "PSQL2,PSQL3"

VCONN_NUM_DBS[3][4] = "2"

# Tile 4 Group 1

VCONN_RMI_HOST[4][1] = "w1-tpcv-vm-37"

VCONN_RMI_PORT[4][1] = "33003"

VCONN_TXN_HOST[4][1] = "w1-tpcv-vm-37"

VCONN_TXN_PORT[4][1] = "43003"

VCONN_DSN_LABELS[4][1] = "PSQL2,PSQL3"

VCONN_NUM_DBS[4][1] = "2"

# Tile 4 Group 2

VCONN_RMI_HOST[4][2] = "w1-tpcv-vm-40"

VCONN_RMI_PORT[4][2] = "33013"

VCONN_TXN_HOST[4][2] = "w1-tpcv-vm-40"

VCONN_TXN_PORT[4][2] = "43013"

VCONN_DSN_LABELS[4][2] = "PSQL2,PSQL3"

VCONN_NUM_DBS[4][2] = "2"

# Tile 4 Group 3

VCONN_RMI_HOST[4][3] = "w1-tpcv-vm43"

VCONN_RMI_PORT[4][3] = "33023"

VCONN_TXN_HOST[4][3] = "w1-tpcv-vm43"

VCONN_TXN_PORT[4][3] = "43023"

VCONN_DSN_LABELS[4][3] = "PSQL2,PSQL3"

VCONN_NUM_DBS[4][3] = "2"

# Tile 4 Group 4

VCONN_RMI_HOST[4][4] = "w1-tpcv-vm46"

VCONN_RMI_PORT[4][4] = "33033"

VCONN_TXN_HOST[4][4] = "w1-tpcv-vm46"

VCONN_TXN_PORT[4][4] = "43033"

VCONN_DSN_LABELS[4][4] = "PSQL2,PSQL3"

VCONN_NUM_DBS[4][4] = "2"

# Tile 5 Group 1

VCONN_RMI_HOST[5][1] = "w1-tpcv-vm49"

VCONN_RMI_PORT[5][1] = "33004"

VCONN_TXN_HOST[5][1] = "w1-tpcv-vm49"

VCONN_TXN_PORT[5][1] = "43004"

VCONN_DSN_LABELS[5][1] = "PSQL2,PSQL3"

VCONN_NUM_DBS[5][1] = "2"

# Tile 5 Group 2

VCONN_RMI_HOST[5][2] = "w1-tpcv-vm52"

VCONN_RMI_PORT[5][2] = "33014"

VCONN_TXN_HOST[5][2] = "w1-tpcv-vm52"

VCONN_TXN_PORT[5][2] = "43014"

VCONN_DSN_LABELS[5][2] = "PSQL2,PSQL3"

VCONN_NUM_DBS[5][2] = "2"

# Tile 5 Group 3

VCONN_RMI_HOST[5][3] = "w1-tpcv-vm55"

VCONN_RMI_PORT[5][3] = "33024"

VCONN_TXN_HOST[5][3] = "w1-tpcv-vm55"

VCONN_TXN_PORT[5][3] = "43024"

VCONN_DSN_LABELS[5][3] = "PSQL2,PSQL3"

VCONN_NUM_DBS[5][3] = "2"

# Tile 5 Group 4

VCONN_RMI_HOST[5][4] = "w1-tpcv-vm58"

VCONN_RMI_PORT[5][4] = "33034"

VCONN_TXN_HOST[5][4] = "w1-tpcv-vm58"

VCONN_TXN_PORT[5][4] = "43034"

VCONN_DSN_LABELS[5][4] = "PSQL2,PSQL3"

VCONN_NUM_DBS[5][4] = "2"

#

###############################################################################
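In this sample, the VCONN_* values for every tile and group follow a fixed pattern: the RMI port is 33000 + 10*(group-1) + (tile-1), the transaction port adds the same offset to 43000, and the host is VM number (tile-1)*12 + (group-1)*3 + 1, i.e. the first VM of each three-VM group (presumably its Tier A VM). The Python sketch below regenerates the entries from that pattern, which can be less error-prone than typing them by hand for many tiles. The pattern is inferred from the sample values rather than taken from the kit, and the host prefix `w1-tpcv-vm` is only the sample's naming scheme; host names are site-specific, so substitute your own.

```python
"""Sketch: regenerate VCONN_* entries from the numbering pattern in the sample.

Assumptions (inferred from the sample values, not from the kit): 4 groups per
tile, 3 VMs per group, 12 VMs per tile, and the hypothetical host prefix below.
"""

HOST_PREFIX = "w1-tpcv-vm"  # hypothetical; replace with your own naming scheme
GROUPS_PER_TILE = 4
VMS_PER_GROUP = 3
VMS_PER_TILE = GROUPS_PER_TILE * VMS_PER_GROUP  # 12


def vconn_entries(tile: int, group: int) -> list[str]:
    """Return the header comment plus six VCONN_* lines for one tile/group (1-based)."""
    offset = 10 * (group - 1) + (tile - 1)
    vm_number = (tile - 1) * VMS_PER_TILE + (group - 1) * VMS_PER_GROUP + 1
    host = f"{HOST_PREFIX}{vm_number}"
    key = f"[{tile}][{group}]"
    return [
        f"# Tile {tile} Group {group}",
        f'VCONN_RMI_HOST{key} = "{host}"',
        f'VCONN_RMI_PORT{key} = "{33000 + offset}"',
        f'VCONN_TXN_HOST{key} = "{host}"',
        f'VCONN_TXN_PORT{key} = "{43000 + offset}"',
        f'VCONN_DSN_LABELS{key} = "PSQL2,PSQL3"',
        f'VCONN_NUM_DBS{key} = "2"',
    ]


if __name__ == "__main__":
    for tile in range(1, 6):
        for group in range(1, GROUPS_PER_TILE + 1):
            print("\n".join(vconn_entries(tile, group)))
            print()
```

Because the tile contributes only a single digit to the port offset, this particular scheme stays collision-free for at most 10 tiles: tile 11 group 1 would reuse the ports of tile 1 group 2.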

###############################################################################

#

# Group-specific Load Configuration

#

# Default values

CUST_CONFIGURED = "5000"

CUST_ACTIVE = "5000"

SCALE_FACTOR = "500"

LOAD_RATE = "2000"

INIT_TRADE_DAYS = "125"

# Group-specific values

CUST_CONFIGURED[1] = "16000"

CUST_ACTIVE[1] = "16000"

SCALE_FACTOR[1] = "500"

LOAD_RATE[1] = "2000"

INIT_TRADE_DAYS[1] = "125"

#

CUST_CONFIGURED[2] = "32000"

CUST_ACTIVE[2] = "32000"

SCALE_FACTOR[2] = "500"

LOAD_RATE[2] = "2000"

INIT_TRADE_DAYS[2] = "125"

#

CUST_CONFIGURED[3] = "48000"

CUST_ACTIVE[3] = "48000"

SCALE_FACTOR[3] = "500"

LOAD_RATE[3] = "2000"

INIT_TRADE_DAYS[3] = "125"

#

CUST_CONFIGURED[4] = "64000"

CUST_ACTIVE[4] = "64000"

SCALE_FACTOR[4] = "500"

LOAD_RATE[4] = "2000"

INIT_TRADE_DAYS[4] = "125"

#
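The load section lists default values first and then group-specific overrides indexed by group number. Below is a minimal sketch of how such a file could be read, assuming (from the "Default values" and "Group-specific values" comments) that a group with no specific key falls back to the default. The parser handles only the `KEY = "value"` and `KEY[i]...[j] = "value"` forms used in this file; it is an illustration, not the driver's own parser, and the function names are hypothetical.

```python
import re

# Matches lines such as  CUST_ACTIVE[2] = "32000"  or  LOAD_RATE = "2000".
LINE_RE = re.compile(r'^\s*([A-Z_][A-Z0-9_]*)((?:\[\d+\])*)\s*=\s*"([^"]*)"\s*$')


def parse_properties(text: str) -> dict[tuple, str]:
    """Map (NAME, index, ...) tuples to string values; '#' lines are skipped."""
    props: dict[tuple, str] = {}
    for line in text.splitlines():
        if line.lstrip().startswith("#"):
            continue
        match = LINE_RE.match(line)
        if match:
            name, index_part, value = match.groups()
            indices = tuple(int(i) for i in re.findall(r"\d+", index_part))
            props[(name, *indices)] = value
    return props


def group_value(props: dict[tuple, str], name: str, group: int) -> str:
    """Return NAME[group] if set, otherwise the default NAME (assumed fallback).

    Raises KeyError if neither the group-specific nor the default key exists.
    """
    if (name, group) in props:
        return props[(name, group)]
    return props[(name,)]


SAMPLE = '''
CUST_ACTIVE = "5000"
LOAD_RATE = "2000"
CUST_ACTIVE[2] = "32000"
'''

if __name__ == "__main__":
    parsed = parse_properties(SAMPLE)
    for group in range(1, 5):
        print(group, group_value(parsed, "CUST_ACTIVE", group))
```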

#GROUP_PCT_DIST_PHASE[1] = "1.0"

GROUP_PCT_DIST_PHASE[1] = "0.10,0.20,0.30,0.40"

GROUP_PCT_DIST_PHASE[2] = "0.05,0.10,0.25,0.60"

GROUP_PCT_DIST_PHASE[3] = "0.10,0.05,0.20,0.65"

GROUP_PCT_DIST_PHASE[4] = "0.05,0.10,0.05,0.80"

GROUP_PCT_DIST_PHASE[5] = "0.10,0.05,0.30,0.55"

GROUP_PCT_DIST_PHASE[6] = "0.05,0.35,0.20,0.40"

GROUP_PCT_DIST_PHASE[7] = "0.35,0.25,0.15,0.25"

GROUP_PCT_DIST_PHASE[8] = "0.05,0.65,0.20,0.10"

GROUP_PCT_DIST_PHASE[9] = "0.10,0.15,0.70,0.05"

GROUP_PCT_DIST_PHASE[10] = "0.05,0.10,0.65,0.20"
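Each GROUP_PCT_DIST_PHASE entry holds four comma-separated fractions, one per group, and in the sample every entry sums to 1.0, so each phase appears to split the tile's load across its four groups. The sketch below checks that every phase has one share per group and sums to 1.0 (within floating-point tolerance), then splits a total rate across the groups for one phase. The `total_rate` argument is an illustrative input, not a kit parameter.

```python
import math

# GROUP_PCT_DIST_PHASE values from the sample: phase number -> per-group shares.
PHASES = {
    1: "0.10,0.20,0.30,0.40",
    2: "0.05,0.10,0.25,0.60",
    3: "0.10,0.05,0.20,0.65",
    4: "0.05,0.10,0.05,0.80",
    5: "0.10,0.05,0.30,0.55",
    6: "0.05,0.35,0.20,0.40",
    7: "0.35,0.25,0.15,0.25",
    8: "0.05,0.65,0.20,0.10",
    9: "0.10,0.15,0.70,0.05",
    10: "0.05,0.10,0.65,0.20",
}


def parse_shares(value: str) -> list[float]:
    """Split a comma-separated share string into floats."""
    return [float(x) for x in value.split(",")]


def check_phases(phases: dict[int, str], num_groups: int = 4) -> None:
    """Raise ValueError if a phase lacks one share per group or does not sum to 1.0."""
    for phase, value in sorted(phases.items()):
        shares = parse_shares(value)
        if len(shares) != num_groups:
            raise ValueError(f"phase {phase}: {len(shares)} shares, expected {num_groups}")
        # Allow for binary floating-point rounding (0.1 + 0.2 is not exactly 0.3).
        if not math.isclose(sum(shares), 1.0, abs_tol=1e-9):
            raise ValueError(f"phase {phase}: shares sum to {sum(shares)}, expected 1.0")


def group_rates(value: str, total_rate: float) -> list[float]:
    """Split a hypothetical total tile rate across the groups for one phase."""
    return [total_rate * share for share in parse_shares(value)]


if __name__ == "__main__":
    check_phases(PHASES)
    for phase, value in sorted(PHASES.items()):
        print(phase, group_rates(value, 1000.0))
```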

# Use DB_CONN_BUFFER_PCT_GROUP to modify the initial number of connections

# opened by the CEs to each Tier A VM for each group. Use values greater than

# 1.0 to increase the number of connections (up to the theoretical maximum) and

# values less than 1.0 to decrease the number of initial connections.

DB_CONN_BUFFER_PCT_GROUP[1] = "1.0"

DB_CONN_BUFFER_PCT_GROUP[2] = "1.0"

DB_CONN_BUFFER_PCT_GROUP[3] = "1.0"

DB_CONN_BUFFER_PCT_GROUP[4] = "1.0"

#

###############################################################################
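The DB_CONN_BUFFER_PCT_GROUP comment describes a multiplier on the initial number of CE connections, capped at a theoretical maximum. The sketch below models that rule with hypothetical `base` and `theoretical_max` inputs; the kit determines both itself, and the ceiling rounding and the floor of one connection are assumptions made only for the illustration.

```python
import math


def initial_connections(base: int, buffer_pct: float, theoretical_max: int) -> int:
    """Scale a baseline connection count by DB_CONN_BUFFER_PCT_GROUP.

    Hypothetical model of the rule in the comment: values above 1.0 add
    connections up to theoretical_max, values below 1.0 remove them. The
    ceiling rounding and the minimum of one connection are assumptions.
    """
    if buffer_pct <= 0:
        raise ValueError("DB_CONN_BUFFER_PCT_GROUP must be positive")
    scaled = math.ceil(base * buffer_pct)
    return max(1, min(scaled, theoretical_max))


if __name__ == "__main__":
    # Illustrative inputs only: baseline of 40 connections, maximum of 50.
    for pct in (0.5, 1.0, 1.5):
        print(pct, initial_connections(40, pct, 50))
```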

###############################################################################

#

# Misc Configuration Parameters

#

RESULT_DIR = "results"

LOG_DIR = "."

SORT_MIX_LOGS = "0"

SORTED_LOG_NAME_APPEND = "sorted"

LOG_SAMPLE_SEC = "30"

# VGEN_INPUT_FILE_DIR = ""

DEBUG_LEVEL = "0"

SUPPRESS_WARNINGS = "1"

FORCE_COMPLIANT_TX_RATE = "0"

CHECK_TIME_SYNC = "0"

COLLECT_CLIENT_LOGS = "0"

NUM_TXN_TYPES = "12"

# NUM_RESP_METRICS is the number of response-related metrics:

# 0 - success count

# 1 - success msec

# 2 - fail count

# 3 - fail msec

NUM_RESP_METRICS = "4"

# NUM_TXN_METRICS is the number of metrics created for reporting purposes

NUM_TXN_METRICS = "5"

TXN_METRIC[0] = "SUCCESS COUNT"

TXN_METRIC[1] = "FAIL COUNT"

TXN_METRIC[2] = "AGG RESP TIME"

TXN_METRIC[3] = "MIN RESP TIME"

TXN_METRIC[4] = "MAX RESP TIME"
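The NUM_RESP_METRICS comment fixes the order of the four per-transaction counters (success count, success msec, fail count, fail msec). The sketch below keeps one row of those counters for each of the NUM_TXN_TYPES transaction types and derives a mean successful response time from the count and total milliseconds. Which row index corresponds to which transaction type is an assumption of the sketch, and how the kit maps these counters onto the TXN_METRIC report columns (for example, what AGG RESP TIME aggregates) is not stated here, so the sketch stops at the raw counters.

```python
# Counter indices, in the order given by the NUM_RESP_METRICS comment.
SUCCESS_COUNT, SUCCESS_MSEC, FAIL_COUNT, FAIL_MSEC = range(4)
NUM_RESP_METRICS = 4
NUM_TXN_TYPES = 12


def new_counters() -> list[list[int]]:
    """One independent row of zeroed counters per transaction type."""
    return [[0] * NUM_RESP_METRICS for _ in range(NUM_TXN_TYPES)]


def record(counters: list[list[int]], txn_type: int, elapsed_msec: int, succeeded: bool) -> None:
    """Add one completed transaction to the counters of its (hypothetical) type index."""
    row = counters[txn_type]
    if succeeded:
        row[SUCCESS_COUNT] += 1
        row[SUCCESS_MSEC] += elapsed_msec
    else:
        row[FAIL_COUNT] += 1
        row[FAIL_MSEC] += elapsed_msec


def mean_success_msec(row: list[int]) -> float:
    """Average successful response time in ms, or 0.0 if nothing succeeded."""
    count = row[SUCCESS_COUNT]
    return row[SUCCESS_MSEC] / count if count else 0.0
```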

CE_MIX_PARAM_INDEX = "0,1"

# BrokerVolumeMixLevel,CustomerPositionMixLevel,

# MarketWatchMixLevel,SecurityDetailMixLevel,

# TradeLookupMixLevel,TradeOrderMixLevel,

# TradeStatusMixLevel,TradeUpdateMixLevel

#CE_MIX_PARAM_0 = "0,0,0,0,0,1000,0,0"

CE_MIX_PARAM_0 = "39,150,170,160,90,101,180,10"

# CE_MIX_PARAM_1 = "59,130,180,140,80,101,190,20"
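Each CE_MIX_PARAM_n line gives eight mix levels in the order listed in the comment above it. Treating each level as a relative weight (our assumption about how the CE uses them), a transaction's share of the CE-driven mix is its level divided by the sum of all eight levels. For CE_MIX_PARAM_0 the levels sum to 900, so Trade-Status gets 180/900 = 20% of the CE-driven transactions. The function name below is hypothetical.

```python
# Field order of CE_MIX_PARAM_n, from the comment in the configuration.
MIX_NAMES = [
    "BrokerVolume", "CustomerPosition", "MarketWatch", "SecurityDetail",
    "TradeLookup", "TradeOrder", "TradeStatus", "TradeUpdate",
]


def mix_shares(param: str) -> dict[str, float]:
    """Turn a CE_MIX_PARAM string into each transaction's share of the CE mix.

    Assumes each level is a relative weight, so share = level / sum(levels).
    """
    levels = [int(x) for x in param.split(",")]
    if len(levels) != len(MIX_NAMES):
        raise ValueError(f"expected {len(MIX_NAMES)} mix levels, got {len(levels)}")
    total = sum(levels)
    if total <= 0:
        raise ValueError("mix levels must sum to a positive number")
    return {name: level / total for name, level in zip(MIX_NAMES, levels)}


if __name__ == "__main__":
    for name, share in mix_shares("39,150,170,160,90,101,180,10").items():
        print(f"{name:17s} {share:6.1%}")
```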

# TXN_TYPE

# "-1" = EGEN-GENERATED MIX

# "0" = SECURITY_DETAIL

# "1" = BROKER_VOLUME

# "2" = CUSTOMER_POSITION

# "3" = MARKET_WATCH

# "4" = TRADE_STATUS

# "5" = TRADE_LOOKUP

# "6" = TRADE_ORDER

# "7" = TRADE_UPDATE
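The TXN_TYPE codes above can be modeled as an enumeration so that an out-of-range code is rejected as soon as the value is read. This is a sketch; the enum and function names are hypothetical, and only the code values come from the comment.

```python
from enum import IntEnum


class TxnType(IntEnum):
    """TXN_TYPE codes as listed in the configuration comments."""
    EGEN_GENERATED_MIX = -1
    SECURITY_DETAIL = 0
    BROKER_VOLUME = 1
    CUSTOMER_POSITION = 2
    MARKET_WATCH = 3
    TRADE_STATUS = 4
    TRADE_LOOKUP = 5
    TRADE_ORDER = 6
    TRADE_UPDATE = 7


def parse_txn_type(value: str) -> TxnType:
    """Convert a TXN_TYPE string such as "-1" or "6"; raises ValueError if unknown."""
    return TxnType(int(value.strip()))
```

Note that the TXN_TYPE numbering does not follow the field order of CE_MIX_PARAM_n (Security-Detail is code 0 here but the fourth mix level there), so the two lists should not be indexed interchangeably.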

BENCHMARK_SPONSOR = "{Sponsor Name}"

SPONSOR_LOGO = "sample_logo.jpg"

TESTBED_IMAGE = "sample_testbed.jpg"

# server vendor name

SERVER_VENDOR = "ABC"

# server model name

SERVER_MODEL = "Hyperia XP100"

# virtualization software vendor name

VIRT_SW_VENDOR = "Virtuosa"

# virtualization software product

VIRT_SOFTWARE = "Nirvana 10.1"

AVAILABILITY_DATE = "Dec 32, 2099"

# SUT processor-related information

PROCESSOR_COUNT = "2"

TOTAL_CORE_COUNT = "64"

TOTAL_THREAD_COUNT = "64"

PROCESSOR_NAME = "XYZ HyperFast 2121"

PROCESSOR_SPEED = "3.99GHz"

PROCESSOR_CACHE = "64MB L3"

# SUT total memory size

TOTAL_SUT_MEMORY = "512 GB"

# TPC Pricing Guide version used with this kit

TPC_PRICING_VERSION = "0.0.0"

# Database-related information

DATABASE_MANAGER = "DEF MoreSQL 1.0"

DATABASE_VM_OS = "Nirvana OS-V 1.0"

DATABASE_VM_MEMORY = "32 GB"

DATABASE_VM_VCPUS = "4"

DATABASE_INITIAL_SIZE = "555,345,678,901"

DATABASE_CUSTOMERS_CONFIGURED = "125,000"

# Hardware descriptions

SERVER_DESC[0] = "2x XYZ HyperFast 2121 Processor 3.99GHz (2/64/64)"

SERVER_DESC[1] = "16x 32GB DDR3 1866 MHz DIMMs"

SERVER_DESC[2] = "4x ABC Storage Array P123/4GB, one per DB VM"

SERVER_DESC[3] = "2x 128GB SFF SAS 15K dual-port HDD (boot)"

SERVER_DESC[4] = "8x 128GB SFF SAS 15K dual-port HDD (DB log, 2/VM)"

SERVER_DESC[5] = "2x 10Gb Ethernet (onboard)"

CLIENT_DESC[0] = "4x ABC WS123 Workstation"

CLIENT_DESC[1] = "2x XYZ KindaFast 1010 Processor 1.99GHz (2/16/16)"

CLIENT_DESC[2] = "2x 8GB PC3-8500 DIMMs"

CLIENT_DESC[3] = "2x 128GB SFF SAS 15K HDD"

CLIENT_DESC[4] = "2x 1Gb Ethernet (onboard)"

STORAGE_DESC[0] = "4x ABC D9000 Disk Enclosure (one per DB VM)"

STORAGE_DESC[1] = "32x ABC 512GB SFF SLC SATA 2.5-inch SSD, 8 per enclosure"

STORAGE_DESC[2] = "Priced: 24x 512GB 15K SFF HDD"

NETWORK_DESC[0] = "ABC LinkUp E9000 24-port 1/10 Network Switch"

NETWORK_DESC[1] = "8x 1Gb ports used, 2x 10Gb ports used"
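With this many indexed keys, a key assigned twice (for example, two SERVER_DESC lines with the same index) is easy to miss, and most key/value readers keep only one of the values. A small checker, sketched under the assumption that the file uses the `KEY[i] = "value"` syntax shown throughout this section and that `#` starts a comment; pass the path of your configuration file as the only argument.

```python
import re
import sys
from collections import Counter

# A key is a name plus optional [n] indices, e.g. SERVER_DESC[4] or VCONN_RMI_HOST[2][3].
KEY_RE = re.compile(r"^\s*([A-Z_][A-Z0-9_]*(?:\[\d+\])*)\s*=")


def duplicate_keys(text: str) -> list[str]:
    """Return keys assigned more than once; '#' comment lines never match KEY_RE."""
    counts: Counter[str] = Counter()
    for line in text.splitlines():
        match = KEY_RE.match(line)
        if match:
            counts[match.group(1)] += 1
    return sorted(key for key, n in counts.items() if n > 1)


if __name__ == "__main__" and len(sys.argv) > 1:
    with open(sys.argv[1], encoding="utf-8") as config_file:
        for key in duplicate_keys(config_file.read()):
            print(f"duplicate key: {key}")
```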

# This pricing data section is commented out in the expectation that users will

# opt for filling out the (optional) pricing spreadsheet and converting that